Discover how structured inputs & outputs transform Agent UX, making AI agents faster, more accurate, and more reliable.


Natural language has become the new way we interact with software. The rise of chat-first interfaces proved that AI agents could understand intent and respond flexibly — a massive step forward from static GUIs. But here’s the catch: chat alone isn’t enough.

When users need to complete real workflows — from filing claims and reviewing analytics to approving budgets — text-only interfaces break down. That’s why the future of Agent UX depends on blending conversation with structured UI components.

Why Text-Only Interfaces Break in Real Agent Workflows

Chat is flexible but unstructured. This makes it brittle across both inputs and outputs.

Problems with Inputs

- Ambiguity:

  Query: “Run a report for next week.”

  → Which dates exactly? Monday–Sunday? Calendar week? Fiscal week? Without a structured date picker, the agent either guesses wrong or interrupts flow with clarifying questions.

- Missing fields:

  Query: “Create a new campaign.”

  → Critical details like start date, budget, or target audience get skipped. The agent stalls mid-flow to ask again, forcing users to re-enter context already in their heads.

- Invalid values:

  Query: “Transfer $5O00 to account 1234.” (with an “O” instead of zero)

  → A tiny typo breaks the transaction. Text has no guardrails to catch or correct format errors before failure.

- No constraints:

  Query: “Assign this ticket to John.”

  → Which John? The system has three. Without a scoped dropdown or ID reference, the agent risks sending it to the wrong person.

- Cognitive overload:

  Query: “Book travel for next month to Berlin with budget approvals.”

  → Users have no guideposts for what’s required vs optional. They end up over-explaining, under-specifying, or going back and forth in frustrating loops.
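To make the "invalid values" and "no constraints" cases concrete, here is a minimal Python sketch of the kind of guardrails a structured field could apply before anything reaches the agent. The function names, the regular expression, and the user directory are all hypothetical illustrations, not part of Adopt's product.

```python
import re
from decimal import Decimal

# Hypothetical format: optional "$", digits (with optional thousands commas),
# and optional two-digit cents.
AMOUNT_PATTERN = re.compile(r"\$?(\d{1,3}(,\d{3})+|\d+)(\.\d{2})?")


def parse_amount(raw: str) -> Decimal:
    """Return the amount as a Decimal, or raise ValueError for malformed input."""
    text = raw.strip()
    if not AMOUNT_PATTERN.fullmatch(text):
        raise ValueError(f"not a valid currency amount: {raw!r}")
    return Decimal(text.lstrip("$").replace(",", ""))


def resolve_assignee(name: str, directory: dict[str, str]) -> str:
    """Return the single user ID whose display name contains `name`.

    Raises LookupError when zero or several users match, which is the
    signal to show a scoped dropdown instead of guessing.
    """
    matches = [uid for uid, display in directory.items()
               if name.lower() in display.lower()]
    if len(matches) != 1:
        raise LookupError(f"{len(matches)} users match {name!r}")
    return matches[0]
```

With checks like these, "$5O00" is rejected at entry time rather than mid-transaction, and "John" in a directory with three Johns raises an error that the interface can turn into a dropdown of candidates.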

Problems with Outputs

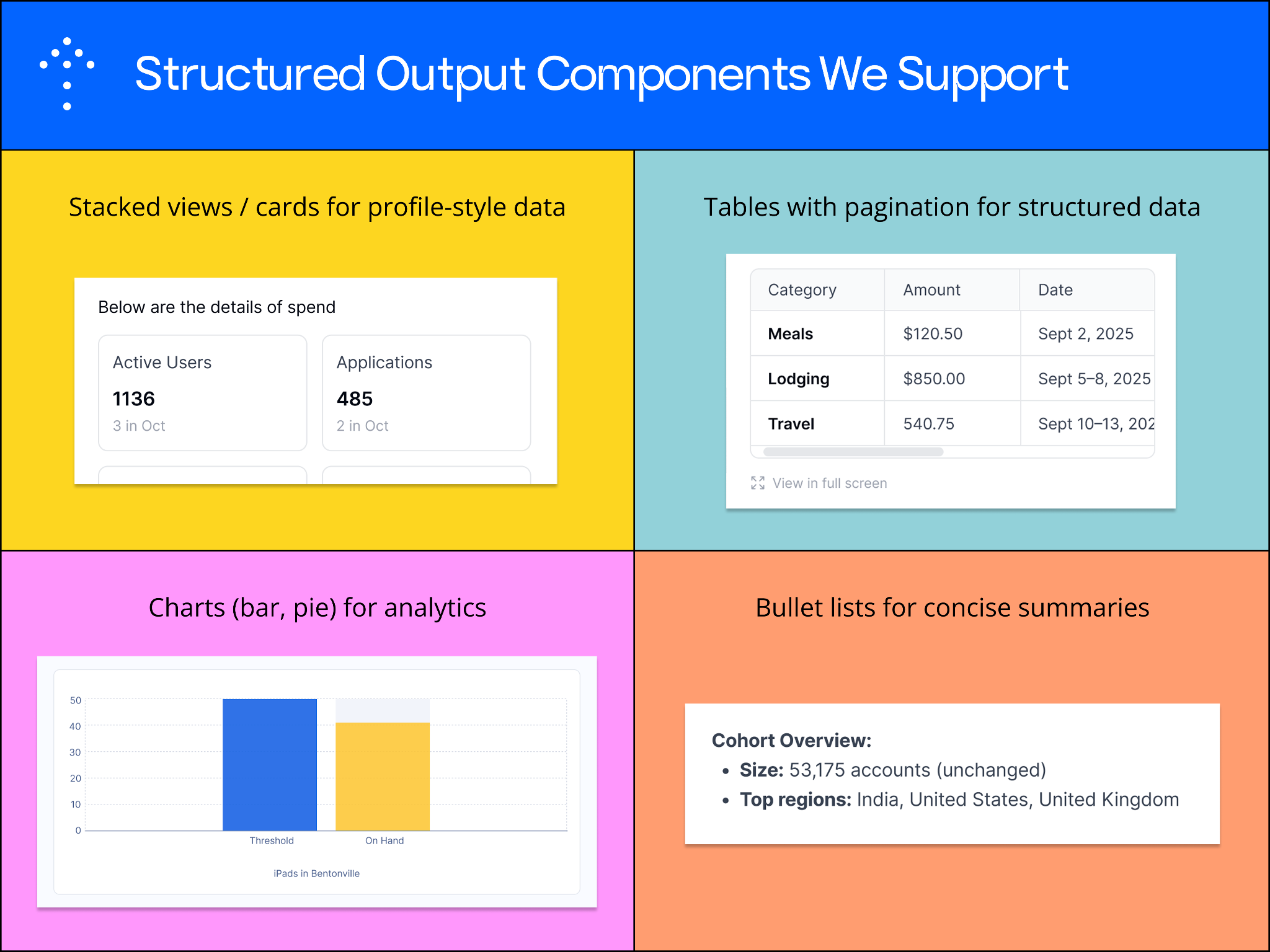

- Walls of text:

  Workflow: asking an agent to “List all active deals by region.”

  → Instead of a table, the agent dumps paragraphs of names and numbers. Users scroll endlessly to parse simple comparisons.

- Low actionability:

  Workflow: “Show me this quarter’s churned customers.”

  → Text-only output means the user can’t filter by geography, click into an account, or export results. They’re left copy-pasting into a spreadsheet.

- Inconsistency:

  Workflow: asking for the same “pipeline summary” twice.

  → One time the agent formats it as bullets, the next as prose. Hard to scan, impossible to trust in downstream chaining.

- Hard to chain:

  Workflow: “Summarize last month’s support tickets, then create tasks for engineering follow-up.”

  → Because the output is free-form text, the agent can’t reliably parse it into actionable items for the next step.

- No visualization:

  Workflow: “Compare usage trends across three products.”

  → Chat responds with a list of numbers. Humans need to see a chart to spot deltas at a glance; instead they get math homework.
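The "hard to chain" problem is easier to see with a small example. The Python sketch below assumes the summarization step returns a table-shaped JSON payload; the payload shape, the column names, and the sample tickets are made up for illustration. Once the output is structured, the follow-up step becomes a plain filter rather than a text-parsing exercise.

```python
import json

# Hypothetical structured output from a "summarize support tickets" step.
# The ticket data is invented purely for this example.
summary_json = """
{
  "componentType": "table",
  "columns": ["ticket_id", "title", "needs_engineering"],
  "rows": [
    ["T-101", "Login loop on SSO", true],
    ["T-102", "Invoice PDF typo", false],
    ["T-103", "API timeout on export", true]
  ]
}
"""


def rows_as_records(table: dict) -> list[dict]:
    """Pair each row with the column names so later steps can use keys."""
    return [dict(zip(table["columns"], row)) for row in table["rows"]]


def engineering_tasks(table: dict) -> list[dict]:
    """Create one follow-up task per ticket flagged for engineering."""
    return [
        {"title": f"Follow up: {r['title']}", "source_ticket": r["ticket_id"]}
        for r in rows_as_records(table)
        if r["needs_engineering"]
    ]


tasks = engineering_tasks(json.loads(summary_json))
```

The same payload could also drive a table or chart in the interface, so the human-facing view and the machine-facing data stay in sync.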

How Adopt AI Solves This: Structured Inputs and Outputs

The solution isn’t to abandon chat — it’s to augment it with structure. At Adopt AI, we’ve built a system where agents don’t just reply in text. They generate structured inputs and outputs, described in JSON, that map directly to UI components.

This means inputs are guided (no more typos, missing fields, or ambiguity), and outputs are actionable (tables, charts, lists — not just paragraphs). The result: workflows that are both conversational and interactive.

Example: Filing an Expense Reimbursement

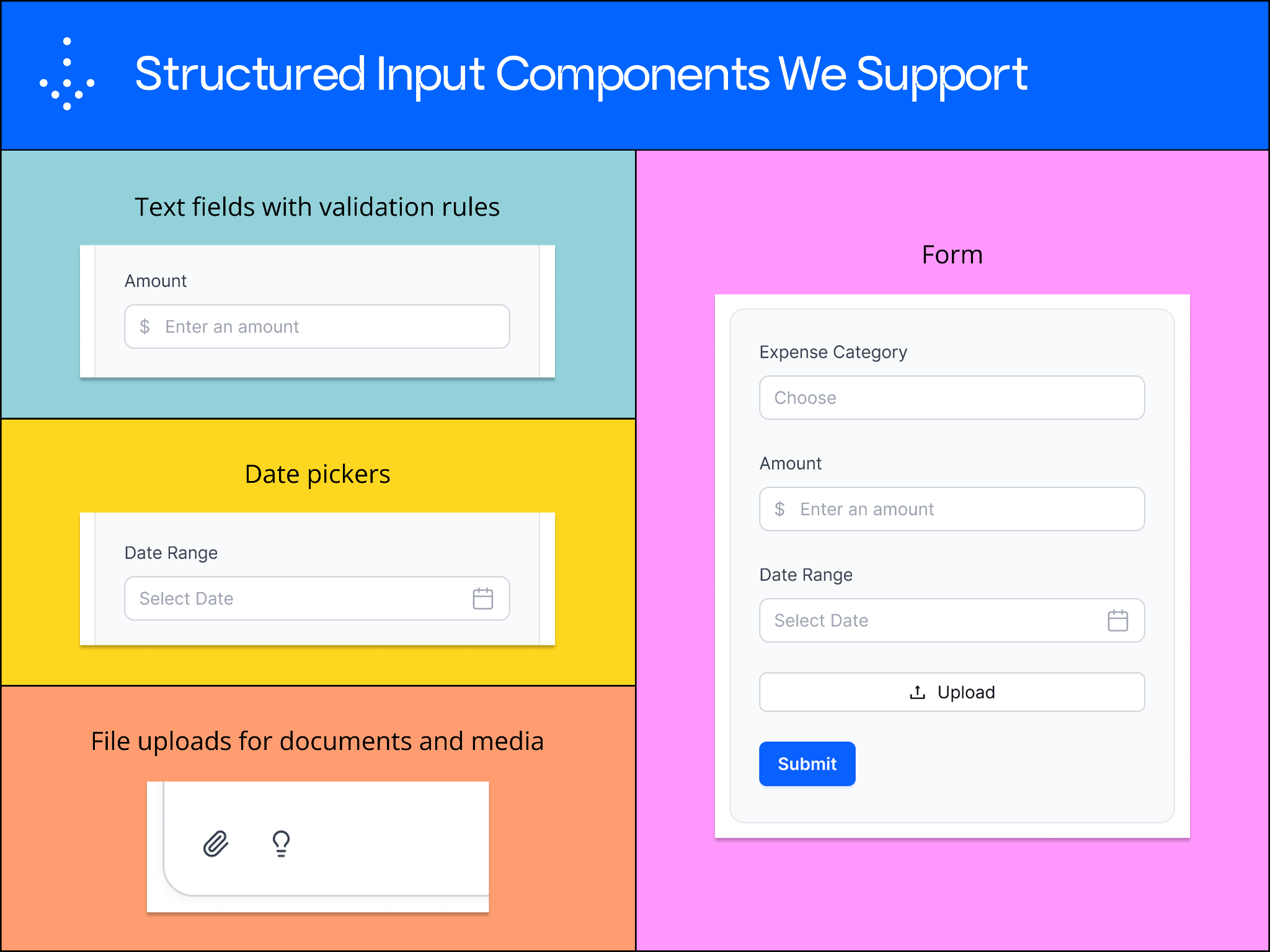

In a text-only flow, an agent would collect details one by one: expense category, amount, dates, receipts. The process is slow, repetitive, and error-prone — and uploading a file isn’t even possible through chat.

With Adopt’s structured IO:

- Expense Category is captured with a dropdown (Travel, Meals, Lodging, Other).

- Amount is entered via a validated text field (currency format).

- Date Range is selected using a date picker with correct formatting.

- Receipt is uploaded directly with a file upload button.

This makes the workflow both conversational and interactive — the agent can still guide the process, but structured components ensure accuracy and speed.


The Schema Behind the Scenes

Here’s how the agent would actually describe this request:

    {
      "title": "Expense Reimbursement Details",
      "form_data": [
        {
          "componentType": "dropdown",
          "config": { "label": "Expense Category" },
          "options": [
            {"value": "travel", "label": "Travel"},
            {"value": "meals", "label": "Meals"},
            {"value": "lodging", "label": "Lodging"},
            {"value": "other", "label": "Other"}
          ]
        },
        {
          "componentType": "text",
          "config": { "label": "Amount", "type": "currency", "required": true }
        },
        {
          "componentType": "daterange",
          "config": { "label": "Date Range", "required": true }
        },
        {
          "componentType": "fileupload",
          "config": {
            "label": "Upload Receipt",
            "acceptedTypes": [".pdf", ".jpg", ".png"],
            "maxFiles": 1,
            "required": true
          }
        }
      ]
    }

The agent defines this schema; the interface renders automatically — no manual UI coding.
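As a rough illustration of what a schema like this makes possible, the Python sketch below checks a submitted form against a trimmed copy of the expense schema. It is not Adopt's implementation: the `validate_submission` function is hypothetical, and keying submitted values by field label is an assumption made only because the schema shown here has no separate field IDs.

```python
from pathlib import Path

# Trimmed copy of the expense form schema; only two fields kept for brevity.
schema = {
    "title": "Expense Reimbursement Details",
    "form_data": [
        {
            "componentType": "dropdown",
            "config": {"label": "Expense Category"},
            "options": [
                {"value": "travel", "label": "Travel"},
                {"value": "meals", "label": "Meals"},
            ],
        },
        {
            "componentType": "fileupload",
            "config": {
                "label": "Upload Receipt",
                "acceptedTypes": [".pdf", ".jpg", ".png"],
                "maxFiles": 1,
                "required": True,
            },
        },
    ],
}


def validate_submission(schema: dict, values: dict) -> list[str]:
    """Return human-readable errors; an empty list means the form is valid."""
    errors = []
    for field in schema["form_data"]:
        config = field["config"]
        label = config["label"]
        value = values.get(label)  # assumption: values are keyed by label
        if value is None or value == "" or value == []:
            if config.get("required"):
                errors.append(f"{label}: required")
            continue
        kind = field["componentType"]
        if kind == "dropdown":
            allowed = {opt["value"] for opt in field.get("options", [])}
            if value not in allowed:
                errors.append(f"{label}: {value!r} is not an allowed option")
        elif kind == "fileupload":
            files = value if isinstance(value, list) else [value]
            max_files = config.get("maxFiles")
            if max_files is not None and len(files) > max_files:
                errors.append(f"{label}: at most {max_files} file(s) allowed")
            accepted = config.get("acceptedTypes")
            for name in files:
                if accepted and Path(name).suffix.lower() not in accepted:
                    errors.append(f"{label}: {name} has an unsupported file type")
    return errors
```

Calling `validate_submission(schema, {"Expense Category": "travel", "Upload Receipt": ["receipt.pdf"]})` returns an empty list, while a missing receipt or an unknown category yields one message per problem that the interface can show next to the field.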

Structured Components Available in Adopt

Here are the key structured input and output components we’ve built to make agents reliable from day one:

Inputs: Dropdowns, Date Range Pickers, Text Fields with Validation, File Uploads.

Outputs: Tables, Stacked Cards, Charts, Bullet Lists.

We’re continuously expanding this library, and customers can bring their own components too. Once added, they work across every agent you build in Adopt — and can even be used to power agents built outside Adopt.

Benefits of Structured Inputs & Outputs

Structured IO changes the game for both users and developers, making agents feel less like chatbots and more like real product experiences.

For Users

- Efficiency: Instead of endless back-and-forth, users complete workflows in a few guided steps. Structured components eliminate wasted turns.

- Accuracy: Validation rules, dropdowns, and constraints prevent errors before they happen — no more typos or wrong IDs derailing progress.

- Personalization: Agents can pre-fill fields with known context, saving time and reducing the need to re-enter obvious information.

- Flexibility: Conversations don’t vanish — users can still type naturally, but the agent knows when to switch into UI mode for precision.

- Clarity: Tables, charts, and summaries turn walls of text into visuals that are easy to scan, compare, and act on.

- Trust: When interactions feel structured and predictable, users gain confidence that the agent will act correctly and reliably.
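The personalization point above can be sketched in a few lines of Python. This assumes a hypothetical `defaultValue` key inside each field's `config`; that key does not appear in the schema shown earlier and is used here only to illustrate how an agent might pre-fill fields from context it already has.

```python
import copy


def prefill(schema: dict, known: dict) -> dict:
    """Return a copy of the schema with defaults set from known context.

    `known` maps field labels to values. `defaultValue` is a hypothetical
    config key chosen for this sketch.
    """
    filled = copy.deepcopy(schema)  # leave the agent's original schema untouched
    for field in filled["form_data"]:
        config = field["config"]
        if config["label"] in known:
            config["defaultValue"] = known[config["label"]]
    return filled


form = {
    "form_data": [
        {"componentType": "dropdown", "config": {"label": "Expense Category"}},
        {"componentType": "text",
         "config": {"label": "Amount", "type": "currency", "required": True}},
    ]
}

# The agent already knows the user is filing a travel expense.
prefilled = prefill(form, {"Expense Category": "travel"})
```

Fields without known context are left empty, so the user only fills in what the agent could not infer.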

For Developers

- No UI plumbing: Developers don’t need to hand-code interfaces for every workflow; agents emit schemas that render into components automatically.

- Consistency: The same primitives — dropdowns, tables, charts — power every workflow, so experiences stay uniform across the product.

- Reusability: Once a component is created, it can be reused across all agents, reducing duplication and speeding up delivery.

- Portability: Adopt’s IO layer isn’t locked in — developers can use the same structured components in agents built outside Adopt as well.

- Faster iteration: With schema-driven design, testing and debugging are quicker — changes can be made instantly without front-end rewrites.
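One common way to get this kind of reuse is a registry that maps each `componentType` to a renderer. The Python sketch below is a generic illustration of that pattern, rendering to plain strings for simplicity; the `register` decorator and renderer functions are hypothetical and do not describe how Adopt renders components internally.

```python
from typing import Callable

# Maps a componentType string to the function that renders that component.
RENDERERS: dict[str, Callable[[dict], str]] = {}


def register(component_type: str):
    """Decorator that registers a renderer for one componentType."""
    def decorator(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        RENDERERS[component_type] = fn
        return fn
    return decorator


@register("dropdown")
def render_dropdown(field: dict) -> str:
    options = ", ".join(opt["label"] for opt in field.get("options", []))
    return f"[{field['config']['label']}: choose one of {options}]"


@register("text")
def render_text(field: dict) -> str:
    return f"[{field['config']['label']}: ____]"


def render_form(schema: dict) -> list[str]:
    """Render every field in the schema using its registered renderer."""
    lines = []
    for field in schema["form_data"]:
        renderer = RENDERERS.get(field["componentType"])
        if renderer is None:
            raise KeyError(f"no renderer registered for {field['componentType']!r}")
        lines.append(renderer(field))
    return lines
```

Adding a new component then means registering one more function, and every schema that mentions that `componentType` picks it up without further front-end changes.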

Conclusion: The Future of Agent UX

Chat showed us the way, but it can’t carry agent experiences alone. The future of Agent UX isn’t chat versus UI — it’s chat plus UI. Agents that both talk and show will define the Agentic Era.

At Adopt, we’ve built the structured IO layer that makes this possible. Every agent you create can now combine conversation with guided inputs and actionable outputs — delivering faster, clearer, more trustworthy user experiences.

👉 If you’re designing agent UX, don’t stop at chat. Get in touch with us at Adopt to see how your app can deliver agent experiences that both talk and show.
