Understand multi-agent frameworks, their architecture, ecosystems, and how enterprises use them to accelerate AI workflows, automation, and decision-making.

TL;DR

- Multi-agent frameworks enable autonomous AI agents to collaborate on complex, distributed tasks, offering scalability and robustness vital for enterprises.

- Leading 2025 frameworks, including Ray, LangChain/LangGraph, CrewAI/AutoGen, MetaGPT, Semantic Kernel, and Agno, cover diverse needs from simulation to AI orchestration and workflow automation.

- Adopt AI accelerates adoption with zero-shot API ingestion, workflow generation, no-code orchestration, and seamless integration with popular frameworks.

- Key challenges in multi-agent frameworks such as coordination, observability, lifecycle, security, and performance are effectively handled by Adopt AI’s advanced platform features.

- Future trends include context-aware self-organizing agents, human-in-the-loop systems, standardized protocols, and expanding ecosystems, with Adopt AI driving infrastructure innovation.

What if AI systems could work like teams: collaborating, delegating, and reasoning together to solve problems too complex for any single model?

That’s the vision driving multi-agent frameworks — the next evolution in AI development.

Multi-agent frameworks equip developers with powerful building blocks: remote actors for parallel execution, message queues for seamless agent communication, persistent storage for maintaining state, and orchestrators to manage retries, failures, and scaling. Together, they enable the creation of robust, scalable AI systems capable of handling complex, multi-step workflows with precision.

Developer’s View: Building Scalable Agent Systems

Consider an e-commerce platform using a multi-agent framework to handle order processing:

- Remote Actors: Each agent handles a specific task, e.g., payment processing, inventory update, or shipment scheduling, running in parallel to reduce latency.

- Message Queues: Agents communicate asynchronously; when the payment agent succeeds, it sends a message to the inventory agent to update stock.

- Persistent Storage: The system logs each order’s status, ensuring no data is lost if an agent crashes or the system restarts.

- Orchestrators: Automatically retry failed tasks, scale agents during peak traffic, and manage dependencies between agents (e.g., shipment can only start after payment confirmation).
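The four building blocks above can be sketched in a single process with Python's standard asyncio library. This is a minimal sketch under stated assumptions, not a production design. asyncio.Queue stands in for a message broker, and a plain dictionary stands in for persistent storage. The agent functions, the with_retries helper, and the simulated payment timeout are all hypothetical.

```python
import asyncio
from typing import Dict

order_status: Dict[str, str] = {}  # stand-in for durable storage (hypothetical)


async def with_retries(step, order_id: str, attempts: int = 3):
    """Orchestrator-style retry: re-run a failing step up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            return await step(order_id, attempt)
        except RuntimeError:
            order_status[order_id] = f"retrying (attempt {attempt})"
    order_status[order_id] = "failed"
    return None


async def charge_payment(order_id: str, attempt: int) -> str:
    # Simulate a transient gateway error on the first attempt for order "o2".
    if order_id == "o2" and attempt == 1:
        raise RuntimeError("payment gateway timeout")
    return "paid"


async def payment_agent(orders, inventory_q: asyncio.Queue) -> None:
    for order_id in orders:
        if await with_retries(charge_payment, order_id) == "paid":
            order_status[order_id] = "paid"
            await inventory_q.put(order_id)  # message the inventory agent
    await inventory_q.put(None)  # sentinel: no more orders


async def inventory_agent(inventory_q: asyncio.Queue, shipping_q: asyncio.Queue) -> None:
    while (order_id := await inventory_q.get()) is not None:
        order_status[order_id] = "stock reserved"
        await shipping_q.put(order_id)
    await shipping_q.put(None)


async def shipping_agent(shipping_q: asyncio.Queue) -> None:
    # Only orders whose payment succeeded upstream ever reach this queue.
    while (order_id := await shipping_q.get()) is not None:
        order_status[order_id] = "shipment scheduled"


async def main() -> None:
    inventory_q: asyncio.Queue = asyncio.Queue()
    shipping_q: asyncio.Queue = asyncio.Queue()
    await asyncio.gather(
        payment_agent(["o1", "o2"], inventory_q),
        inventory_agent(inventory_q, shipping_q),
        shipping_agent(shipping_q),
    )


asyncio.run(main())
print(order_status)  # {'o1': 'shipment scheduled', 'o2': 'shipment scheduled'}
```

The payment-before-shipment dependency falls out of the queue topology. The shipping agent never sees an order that has not passed through the payment and inventory agents. You could replace the queues with a real broker and the dictionary with a database without changing the agent boundaries.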

Outcome: Customers receive faster order processing, fewer errors, and a system that scales seamlessly during high-demand events like Black Friday.

In this article, we’ll dive into multi-agent frameworks and see how they’re changing the AI landscape. We’ll cover their core building blocks, the tools and platforms that make them practical, and how they help AI systems work together, scale, and tackle complex problems. Along the way, we’ll explore real-world patterns and architectures that developers can use to build robust, efficient AI systems.

Understanding Multi-Agent Frameworks

Multi-agent frameworks consist of systems where multiple autonomous agents interact within a shared environment to achieve shared or individual goals. These frameworks allow agents to work collaboratively or independently, depending on the task requirements and goals.

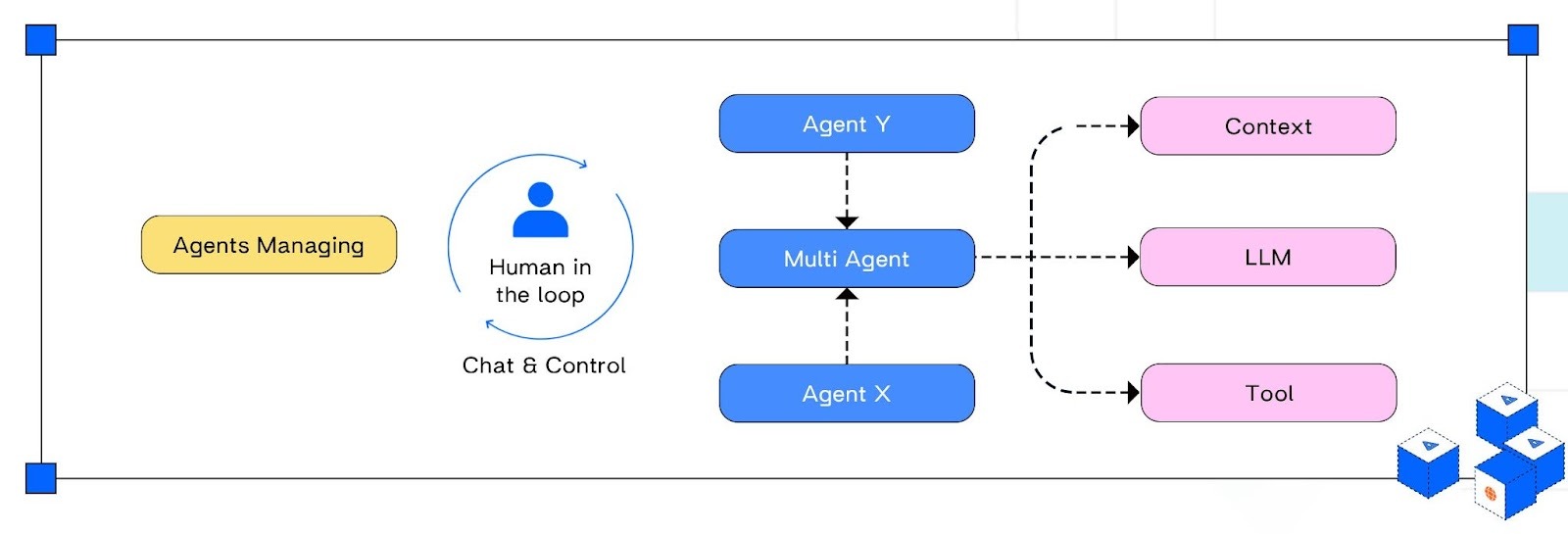

Core Components:

- Autonomous Agents

Independent entities (such as Agent X and Agent Y) capable of making decisions, learning, and acting without human intervention within a system. Each agent operates with defined responsibilities and can interact both with each other and with the environment to accomplish assigned tasks.

- Environment

The shared context for all agents, including data sources, APIs, tools, and language models (visualized as "Context," "LLM," and "Tool" in the diagram). This environment provides information, resources, and external systems that agents perceive and interact with to fulfill their objectives.

- Communication

Protocols and methods (depicted as interactions and chat/control flows) enabling agents to exchange information, coordinate activities, and share system state. Effective communication allows both agents and humans to collaboratively manage and debug agent workflows.

- Coordination & Cooperation

Strategies and mechanisms (shown in the diagram as the central "Multi Agent" system and its "Agents Managing" roles) that align individual agent goals, facilitate efficient task division, and enable agents to work together and adapt as a collective to handle complex enterprise workflows.

Benefits of Multi-Agent Frameworks:

- Modular Specialization: Developers build isolated agents for specific tasks (e.g., fraud detection), allowing independent updates, testing, and deployment without impacting the whole system.

- Layered Orchestration: A hierarchical controller routes tasks among expert agents, improving efficiency, managing failures, and easing debugging by isolating issues.

- Event-Driven Communication: Agents exchange data asynchronously using pub/sub systems like Kafka, enhancing real-time responsiveness, decoupling components, and enabling independent scaling.

- Persistent State Management: Agents store workflow state in durable storage to support checkpointing and fault recovery, ensuring reliability in long-running enterprise processes.
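Checkpointing and fault recovery can be illustrated with a small sketch. The workflow saves its state to a JSON file after every step, and a restarted run skips the steps that were already checkpointed. The step names, the file layout, and the simulated crash are assumptions for illustration. Production systems would more likely use a database or a framework's built-in checkpointer.

```python
import json
import os
import tempfile
from typing import List, Optional, Tuple

# Hypothetical steps of a long-running compliance workflow.
STEPS = ["validate_transaction", "score_risk", "check_compliance"]


def load_checkpoint(path: str) -> dict:
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"completed": [], "results": {}}


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then atomically swap it in, so a crash
    mid-write never leaves a corrupt checkpoint."""
    fd, tmp_path = tempfile.mkstemp(dir=os.path.dirname(path))
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def run_workflow(path: str, crash_after: Optional[str] = None) -> Tuple[dict, List[str]]:
    state = load_checkpoint(path)
    executed: List[str] = []
    for step in STEPS:
        if step in state["completed"]:
            continue  # finished before the restart; do not redo it
        state["results"][step] = f"{step}: ok"  # placeholder for the agent's real work
        state["completed"].append(step)
        executed.append(step)
        save_checkpoint(path, state)
        if step == crash_after:
            raise RuntimeError("simulated crash")
    return state, executed


checkpoint = os.path.join(tempfile.mkdtemp(), "workflow.json")
try:
    run_workflow(checkpoint, crash_after="score_risk")
except RuntimeError:
    pass  # the "process" died after two checkpointed steps

state, executed = run_workflow(checkpoint)  # restart resumes where it left off
print(executed)  # ['check_compliance']
```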

Example: Financial institutions partition workflows like risk analysis, transaction validation, and compliance into specialized agents coordinated hierarchically. This architecture boosts throughput, reliability, and regulatory compliance while simplifying development.

Architecture and Design Principles of Multi-Agent Frameworks

Architecture Walkthrough

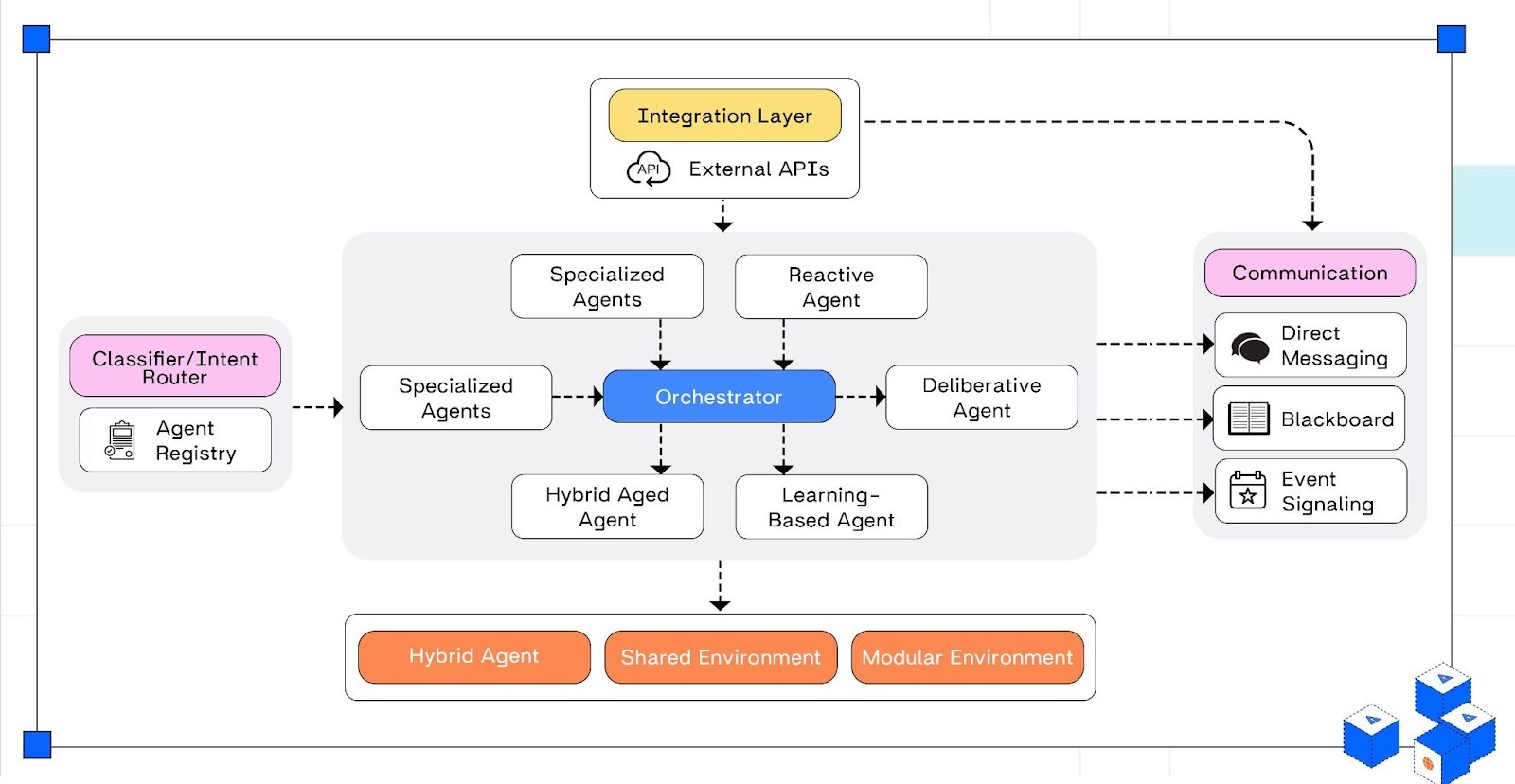

The diagram above illustrates how the core components of a multi-agent framework work together to enable scalable, adaptive, and intelligent agent collaboration across dynamic environments.

Orchestrator: "Acts as the central control unit, directing tasks to the appropriate agents based on incoming requests."

Agent Types: "Agents of different types, such as Reactive, Deliberative, Hybrid, Learning-Based, and Specialized agents, each handle specific tasks within the system."

Classifier/Intent Router & Agent Registry: "Classifies incoming tasks and routes them to the suitable agent, with a registry maintaining agent capabilities."

Communication Mechanisms: "Facilitates agent interactions through direct messaging, blackboard systems, and event-based signaling."

Integration Layer & Persistent State: "Connects external APIs and maintains session data for continuity."

Environment Design: "Supports both shared and modular environments for agent operations."
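The classifier/intent router and agent registry can be sketched as two cooperating pieces. A registry records which intents each agent declares it can handle. A router classifies each request and looks up a capable agent. The article describes classifiers built on NLU or LLMs. This sketch swaps in simple keyword matching so that it runs without a model. All agent names, intents, and keywords are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Handler = Callable[[str], str]

# Hypothetical keyword table standing in for an NLU/LLM classifier.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "billing": ["invoice", "refund", "charge"],
    "shipping": ["delivery", "tracking", "shipment"],
}


def classify(request: str) -> str:
    """Return the first intent whose keywords appear in the request."""
    text = request.lower()
    for intent, words in INTENT_KEYWORDS.items():
        if any(word in text for word in words):
            return intent
    return "general"


@dataclass
class AgentRegistry:
    handlers: Dict[str, Handler] = field(default_factory=dict)  # agent name -> handler
    capabilities: Dict[str, str] = field(default_factory=dict)  # intent -> agent name

    def register(self, name: str, intents: List[str], handler: Handler) -> None:
        self.handlers[name] = handler
        for intent in intents:
            self.capabilities[intent] = name

    def resolve(self, intent: str) -> str:
        # Fall back to whichever agent registered the "general" intent.
        return self.capabilities.get(intent, self.capabilities["general"])


class Orchestrator:
    def __init__(self, registry: AgentRegistry) -> None:
        self.registry = registry

    def handle(self, request: str) -> str:
        agent_name = self.registry.resolve(classify(request))
        return f"{agent_name}: {self.registry.handlers[agent_name](request)}"


registry = AgentRegistry()
registry.register("BillingAgent", ["billing"], lambda r: "refund ticket opened")
registry.register("ShippingAgent", ["shipping"], lambda r: "tracking link sent")
registry.register("HelpdeskAgent", ["general"], lambda r: "forwarded to a human")

orc = Orchestrator(registry)
print(orc.handle("Where is my delivery?"))  # ShippingAgent: tracking link sent
print(orc.handle("I want a refund"))        # BillingAgent: refund ticket opened
```

Because agents self-declare their intents at registration time, you can add a new specialist without editing the orchestrator. This is the "dynamic agent discovery" idea in miniature.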

Design Principles

The multi-agent framework incorporates several key design principles to ensure modularity, reliability, scalability, and adaptability:

- Modularity and Specialization: "Each agent is designed with a specific role, enhancing system flexibility and maintainability."

- Centralized Coordination with Distributed Execution: "A central orchestrator manages tasks, while agents operate autonomously."

- Dynamic Agent Discovery and Intent Routing: "Enables scalable and flexible system evolution."

- Robust Communication Protocols: "Multiple channels ensure efficient agent interactions."

- Context-Aware Environment Design: "Adapts to both shared and isolated operational contexts."

- Persistent State and Context Management: "Maintains continuity across interactions."

- Cloud-Native Deployment and Scalability: "Ensures elastic scaling and resilience."

- Performance-Oriented Engineering: "Focuses on metrics like collaboration success and efficiency."

This design philosophy balances autonomy and coordination, modularity, and integration, laying a foundation for building powerful, resilient multi-agent AI systems that can tackle sophisticated and distributed challenges.

Performance Evaluation

Metrics Overview: Performance is assessed along three dimensions:

- Collaboration Success: "Measures how effectively agents achieve collective objectives."

- Efficiency: "Assesses resource utilization and task completion times."

- Adaptability: "Evaluates the system's response to dynamic changes and failure recovery."
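These metrics can be computed from per-task run records. The record fields and formulas below are one reasonable operationalization chosen for illustration, not a standard definition:

- Collaboration success: the share of tasks whose goal was met.
- Efficiency: mean completion time only. Resource utilization is left out.
- Adaptability: the share of observed failures that were recovered.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class TaskRecord:
    goal_met: bool   # did the agents jointly achieve the task's objective?
    seconds: float   # wall-clock completion time
    failures: int    # agent or step failures observed during the task
    recovered: int   # failures the system recovered from (retry, reroute)


def evaluate(records: List[TaskRecord]) -> dict:
    total_failures = sum(r.failures for r in records)
    return {
        "collaboration_success": sum(r.goal_met for r in records) / len(records),
        "mean_completion_s": mean(r.seconds for r in records),
        # Adaptability as recovery rate; defined as 1.0 when nothing failed.
        "recovery_rate": (
            sum(r.recovered for r in records) / total_failures if total_failures else 1.0
        ),
    }


runs = [
    TaskRecord(goal_met=True, seconds=12.0, failures=1, recovered=1),
    TaskRecord(goal_met=True, seconds=8.0, failures=0, recovered=0),
    TaskRecord(goal_met=False, seconds=20.0, failures=2, recovered=1),
    TaskRecord(goal_met=True, seconds=10.0, failures=1, recovered=1),
]
print(evaluate(runs))
# {'collaboration_success': 0.75, 'mean_completion_s': 12.5, 'recovery_rate': 0.75}
```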

Technical Insights from Industry Examples

- Orchestrators: "Semantic Kernel unifies request management, context handling, and lifecycle control."

- Classifiers: "Leverage NLU and LLMs to direct inputs to appropriate agents."

- Registries: "Dynamically discover and validate agents for scalable evolution."

- Communication Protocols: "Frameworks may follow supervisor, hierarchical, or networked models."

- Cloud-Native Deployments: "Use container orchestration (e.g., Kubernetes) to achieve scalability and resilience."

Practical Example: Multi-Agent Framework in Python

For a hands-on illustration of these principles, examine this simplified Python multi-agent system, broken down into essential sections for clarity.

Shared Environment Setup

The system uses a shared environment (blackboard) where agents collaboratively read and write data.

```python
environment = {"topic": "AI Agent Frameworks", "facts": [], "report": ""}
```

Explanation: This data structure simulates a workspace where agents exchange knowledge and build a collective report.

Defining Agents

Agents are defined as threads with different roles, representing reactive, deliberative, hybrid, and learning-based behaviors.

```python
import threading


class Agent(threading.Thread):
    ...

    def process(self, msg):
        if self.role == "Reactive":
            self.environment["facts"].append("Reactive agent observed event.")
        elif self.role == "Deliberative":
            ...
```

Explanation: Each agent behaves independently, updating the shared environment based on its reasoning type and reporting status to an orchestrator.

Orchestrator: Centralized Coordination

The orchestrator manages the agents, distributes tasks, collects progress, and consolidates final results.

```python
class Orchestrator:
    def notify(self, agent_name, status):
        ...

    def finish(self):
        ...
```

Explanation: This central controller ensures smooth task flow, monitors agent completion, and triggers shutdown once all tasks are done.

Running the Multi-Agent System

Agents are instantiated with specific roles and started under the orchestrator’s control.

```python
orc = Orchestrator(environment)
orc.add_agent("A1", "Reactive")
orc.add_agent("A2", "Deliberative")
...
orc.start()
```

Explanation: This segment initializes a team of specialized agents and runs the coordinated workflow until all agents complete their tasks, finally outputting the combined report.

Overview

This example demonstrates how design principles translate into working components: agents with distinct reasoning, centralized coordination, asynchronous communication, and shared environmental data. Developers can extend this template with more complex logic, communication protocols, and distributed setups aligned with industrial multi-agent frameworks.
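To run the pattern end to end, here is one way the elided pieces could be filled in. This is a hypothetical completion written for illustration, not the original author's full listing. The following are all assumptions:

- the constructor and method bodies;
- the shared lock that serializes blackboard writes;
- the Deliberative and fallback behaviors;
- the sorted report format.

```python
import threading

environment = {"topic": "AI Agent Frameworks", "facts": [], "report": ""}


class Agent(threading.Thread):
    def __init__(self, name, role, environment, orchestrator):
        super().__init__(name=name)
        self.role = role
        self.environment = environment
        self.orchestrator = orchestrator

    def run(self):
        self.process(f"Task on {self.environment['topic']}")
        self.orchestrator.notify(self.name, "done")  # report status to the orchestrator

    def process(self, msg):
        # Only the Reactive behavior comes from the article; the rest is hypothetical.
        with self.orchestrator.lock:  # serialize writes to the shared blackboard
            if self.role == "Reactive":
                self.environment["facts"].append("Reactive agent observed event.")
            elif self.role == "Deliberative":
                self.environment["facts"].append(f"Deliberative agent planned: {msg}.")
            else:
                self.environment["facts"].append(f"{self.role} agent contributed.")


class Orchestrator:
    def __init__(self, environment):
        self.environment = environment
        self.lock = threading.Lock()
        self.agents = []
        self.completed = set()

    def add_agent(self, name, role):
        self.agents.append(Agent(name, role, self.environment, self))

    def notify(self, agent_name, status):
        with self.lock:
            self.completed.add(agent_name)

    def start(self):
        for agent in self.agents:
            agent.start()
        for agent in self.agents:
            agent.join()  # wait until every agent has finished
        self.finish()

    def finish(self):
        # Consolidate the blackboard facts into one report (sorted for stable output).
        self.environment["report"] = " ".join(sorted(self.environment["facts"]))
        print(self.environment["report"])


orc = Orchestrator(environment)
orc.add_agent("A1", "Reactive")
orc.add_agent("A2", "Deliberative")
orc.add_agent("A3", "Hybrid")
orc.start()
```

Threads keep the sketch simple but share one Python process. The same structure maps onto processes or remote actors once the blackboard moves to an external store.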

With the basics of multi-agent frameworks understood, let's explore the leading platforms that offer diverse, scalable solutions for building advanced multi-agent AI systems.

Orchestrating Intelligence: Top Multi-Agent Frameworks to Watch in 2025

As of 2025, the ecosystem of multi-agent AI frameworks has matured with several prominent platforms catering to diverse developer needs and use cases. Here's an in-depth look at leading frameworks shaping multi-agent systems today.

CrewAI

CrewAI specializes in role-driven multi-agent orchestration, enabling multiple agents with clearly defined responsibilities to collaborate efficiently. It is beginner-friendly and practical for teamwork-oriented workflows.

- Strengths: Role-driven multi-agent orchestration, beginner-friendly, practical for collaboration.

- Ideal Use Cases: Workflow automation, structured team-like agent collaborations.
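Role-driven, sequential orchestration can be sketched without any framework. Each agent carries a role and a goal, each task is assigned to one agent, and every task receives the earlier outputs as context. This is a plain-Python illustration of the idea, not CrewAI's API. For orientation, CrewAI's own building blocks are its Agent, Task, and Crew classes, with Process.sequential selecting this execution order. The lambdas below are hypothetical placeholders for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RoleAgent:
    role: str
    goal: str
    work: Callable[[str, str], str]  # (task description, upstream context) -> output


@dataclass
class Task:
    description: str
    agent: RoleAgent


def run_sequential(tasks: List[Task]) -> List[str]:
    """Run tasks in order, passing all earlier outputs downstream as context."""
    outputs: List[str] = []
    for task in tasks:
        context = "\n".join(outputs)
        result = task.agent.work(task.description, context)
        outputs.append(f"[{task.agent.role}] {result}")
    return outputs


researcher = RoleAgent(
    role="Researcher",
    goal="Collect facts about a sales lead",
    work=lambda desc, ctx: "Acme Corp: 500 employees, uses CRM X",  # placeholder data
)
writer = RoleAgent(
    role="Writer",
    goal="Summarize findings for the sales team",
    work=lambda desc, ctx: f"Summary based on {len(ctx.splitlines())} upstream note(s)",
)

for line in run_sequential([Task("Research the lead", researcher),
                            Task("Write a one-line brief", writer)]):
    print(line)
# [Researcher] Acme Corp: 500 employees, uses CRM X
# [Writer] Summary based on 1 upstream note(s)
```

Prefixing every output with its role keeps the hand-offs readable in logs. This is part of why role-driven designs are easy to debug.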

Setup & Basic Steps:

- Follow setup guides on the official CrewAI website.

- Use the visual designer or REST APIs to create agents, assign roles, and build workflows.

- Test workflows within the platform’s simulation environment.

- Deploy to cloud or on-premises, scaling workflows according to enterprise needs.

Resources: CrewAI Official Website

Pros:

- Clear role-driven architecture simplifies task delegation and debugging.

- Supports sequential and hierarchical workflows for predictable automation.

- Strong integration with popular LLMs and enterprise APIs.

- Offers no-code tools plus deep customization with Python SDK.

- Real-time observability tools aid tracing and debugging.

- Enterprise-ready with scalable deployment and permission controls.

Cons:

- Limited support for dynamic, non-linear agent interactions or conversational loops.

- Less out-of-the-box observability compared to some competitors.

- UI and debugging tools can feel black-boxed, reducing transparency.

- Best suited for structured workflows; less flexible for exploratory AI research.

- Some learning curve for advanced scripting and integration.

How This Works in Practice Inside an Enterprise Deployment: CrewAI

CrewAI helps Fortune 500 companies automate complex workflows across teams. For example, DocuSign used CrewAI agents to streamline lead data consolidation, speeding up sales processes. PwC significantly improved code-generation accuracy by leveraging CrewAI's role-driven multi-agent workflows, reducing turnaround times and boosting efficiency.

AutoGen

AutoGen supports event-driven asynchronous multi-agent conversations with human-in-the-loop support, designed for enterprise-level scalability and real-time interactions.

- Strengths: Event-driven asynchronous multi-agent conversations, human-in-the-loop support, enterprise-focused.

- Ideal Use Cases: Dynamic collaborative AI systems and real-time multi-agent concurrency.

AutoGen is used in dynamic collaborative AI systems that require real-time multi-agent concurrency and human oversight, such as live coding assistants, intelligent meeting facilitators, and adaptive workflow engines in large enterprises.
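The event-driven, human-in-the-loop pattern can be sketched with asyncio alone. Agents are coroutines that react to messages on their own inbox queues, and a human approval step gates the final answer. This is not AutoGen's API; AutoGen's own agent classes live in packages such as autogen-agentchat. The following are hypothetical:

- the coder and reviewer agents;
- the (sender, content) message format;
- the auto-approving human_approval stub.

```python
import asyncio
from typing import Awaitable, Callable, List, Tuple

Message = Tuple[str, str]  # (sender, content)


async def coder(inbox: asyncio.Queue, reviewer_inbox: asyncio.Queue) -> None:
    """Sends a first draft, then revises whenever feedback arrives."""
    draft = 1
    await reviewer_inbox.put(("coder", f"draft v{draft}"))
    while True:
        _sender, content = await inbox.get()
        if content == "STOP":
            return
        draft += 1
        await reviewer_inbox.put(("coder", f"draft v{draft} (addressed: {content})"))


async def reviewer(inbox: asyncio.Queue, coder_inbox: asyncio.Queue,
                   approve: Callable[[str], Awaitable[bool]],
                   transcript: List[Message]) -> None:
    """Requests one revision, then asks a human to approve before finishing."""
    while True:
        sender, content = await inbox.get()
        transcript.append((sender, content))
        if content == "draft v1":
            await coder_inbox.put(("reviewer", "add input validation"))
        elif await approve(content):  # human-in-the-loop checkpoint
            transcript.append(("human", f"approved: {content}"))
            await coder_inbox.put(("reviewer", "STOP"))
            return
        else:
            await coder_inbox.put(("reviewer", "human rejected, please revise"))


async def human_approval(content: str) -> bool:
    # Stub: a real system would wait on a UI action, chat reply, or ticket here.
    await asyncio.sleep(0)
    return True


async def main() -> List[Message]:
    coder_inbox: asyncio.Queue = asyncio.Queue()
    reviewer_inbox: asyncio.Queue = asyncio.Queue()
    transcript: List[Message] = []
    await asyncio.gather(
        coder(coder_inbox, reviewer_inbox),
        reviewer(reviewer_inbox, coder_inbox, human_approval, transcript),
    )
    return transcript


for sender, content in asyncio.run(main()):
    print(f"{sender}: {content}")
# coder: draft v1
# coder: draft v2 (addressed: add input validation)
# human: approved: draft v2 (addressed: add input validation)
```

If the approver keeps rejecting, the conversation continues indefinitely. A real deployment would add a round limit or escalation path.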

Setup & Basic Steps:

- Follow the setup guides in the AutoGen GitHub repository and documentation.

- Use AutoGen Studio (a low-code visual interface) or the Python APIs to define roles and workflows.

- Deploy in cloud or on-prem environments, customizing for your enterprise scale.

Resources: AutoGen Documentation

Pros

- Event-driven, async architecture for real-time, scalable multi-agent workflows

- Human-in-the-loop checkpoints for controlled agent behavior

- Enterprise-ready orchestration with strong fault tolerance

- Flexible agent roles for complex collaboration

- Open-source and extensible with custom integration support

Cons

- Requires strong distributed systems and concurrency expertise

- Steep learning curve for new developers

- Minimal default UI—needs custom monitoring tools

- Smaller community and ecosystem

- Complex setup and ops at enterprise scale

How This Works in Practice Inside an Enterprise Deployment: AutoGen

AutoGen is utilized by enterprises to power intelligent meeting facilitators that automate note-taking, summarization, and task assignments during live meetings. It also supports asynchronous multi-agent workflows in live coding assistants, where agents collaborate and refine code with human feedback in real time. These applications showcase AutoGen’s strength in orchestrating dynamic, event-driven multi-agent conversations with human-in-the-loop scalability.

MetaGPT

MetaGPT is a multi-agent framework that simulates a complete software company by assigning specialized roles (Product Manager, Architect, Engineer, QA) to AI agents. It transforms natural language requirements into comprehensive software projects with code, documentation, and tests.

Key Strengths: Role-based collaboration, standardized operating procedures (SOPs), end-to-end automation.

Ideal Use Cases: Automated software development, rapid prototyping, MVP creation, and enterprise workflow automation. MetaGPT has been used to generate complete applications, automate coding tasks, and streamline software development processes from requirements to deployment.

MetaGPT (Python)

Setup & Basic Steps:

Install Python 3.9+.

Install MetaGPT via pip:

```bash
pip install metagpt
```

Configure API keys for OpenAI or other LLM providers.

Define roles and requirements for your software project.

Run the multi-agent system to generate complete software solutions.

Sample Snippet:

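```python
import asyncio

from metagpt.roles import Architect, Engineer, ProductManager
from metagpt.team import Team  # older releases: metagpt.software_company.SoftwareCompany


async def main() -> None:
    company = Team()
    company.hire([ProductManager(), Architect(), Engineer()])
    company.run_project("Build a task management web app")  # publish the requirement
    await company.run(n_round=5)  # let the roles collaborate for up to 5 rounds


asyncio.run(main())
```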

Resources: Official Documentation

Pros

- Role-based multi-agent architecture mimicking real software development teams.

- Generates complete software projects including code, tests, and documentation automatically.

- Integrates Standard Operating Procedures (SOPs) to ensure consistent, high-quality outputs.

- Reported 85.9% Pass@1 on HumanEval in the original MetaGPT paper, state-of-the-art on that benchmark at the time.

- Supports various LLM providers and can be customized for specific development workflows.

Cons

- Requires LLM API access which can be expensive for large projects.

- Generated code quality depends heavily on the underlying LLM capabilities.

- Primarily oriented toward software development; less suited to general-purpose multi-agent applications.

- Still developing; some advanced enterprise features may be incomplete.

How This Works in Practice Inside an Enterprise Deployment: MetaGPT

MetaGPT powers enterprise software development by automating routine coding tasks and accelerating MVP creation. Companies use it to transform product requirements into working prototypes, generate boilerplate code, and maintain consistent development standards across teams. Its role-based approach ensures comprehensive software deliverables while reducing development time from weeks to hours.

Semantic Kernel

Semantic Kernel is a lightweight, open-source SDK from Microsoft designed to build AI agents and multi-agent systems that integrate large language models (LLMs) with native code. It supports C#, Python, and Java, providing a modular and extensible middleware that simplifies connecting AI models with existing code and workflows.

Key Strengths

- Minimal, modular architecture lets developers rapidly build AI agents combining prompts, functions, APIs, and plugins.

- Supports multi-agent collaboration with agents that can communicate, share data, and coordinate tasks in real time.

- Human-in-the-loop integrations enable controlled decision-making and approvals.

- Enterprise-ready with telemetry, security hooks, and robust lifecycle management.

- Model-agnostic—easily switch or integrate various AI models like OpenAI, Azure OpenAI, and Hugging Face.

- Strong .NET ecosystem integration along with Python and Java support.

Ideal Use Cases

- Building conversational AI agents, chatbots, and personal assistants that route tasks, maintain memory, and call external plugins.

- Automating document processing and knowledge retrieval with embeddings and vector databases.

- Developing AI copilots embedded into business tools like CRMs or ERP dashboards.

- Coordinating autonomous multi-agent workflows that involve task delegation and complex orchestration.

Setup & Basic Steps

- Install the Semantic Kernel SDK for your language (Python, C#, Java).

- Define AI agents by composing plugins, prompts, and skills that can call external functions.

- Use the Kernel as a dependency injection container to orchestrate calling AI models and application functions.

- Enable multi-agent collaboration and messaging for distributed workflows.

- Integrate human-in-the-loop steps for approvals and input where needed.
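The "kernel as container" idea from the steps above can be sketched in plain Python. Functions are registered under a plugin name and invoked by name, and sensitive functions pass through a human approval hook first. In Semantic Kernel's Python SDK, the analogous pieces are the Kernel object, its add_plugin method, and functions marked with the kernel_function decorator. The MiniKernel class below is a hypothetical stand-in that runs without the SDK or a model. The approval policy and plugin functions are also made up for illustration.

```python
from typing import Callable, Dict, Set


class MiniKernel:
    """Toy container: registers plugin functions and invokes them by name."""

    def __init__(self, approver: Callable[[str, dict], bool]) -> None:
        self.functions: Dict[str, Callable[..., str]] = {}
        self.needs_approval: Set[str] = set()
        self.approver = approver

    def add_function(self, plugin: str, name: str, fn: Callable[..., str],
                     requires_approval: bool = False) -> None:
        key = f"{plugin}.{name}"
        self.functions[key] = fn
        if requires_approval:
            self.needs_approval.add(key)

    def invoke(self, plugin: str, name: str, **kwargs) -> str:
        key = f"{plugin}.{name}"
        if key in self.needs_approval and not self.approver(key, kwargs):
            return f"{key} blocked: approval denied"
        return self.functions[key](**kwargs)


def lookup_customer(customer_id: str) -> str:
    return f"customer {customer_id}: tier=gold"  # placeholder for a CRM call


def issue_refund(customer_id: str, amount: float) -> str:
    return f"refunded {amount:.2f} to {customer_id}"  # placeholder for a billing call


def approver(key: str, args: dict) -> bool:
    # Hypothetical policy standing in for a human reviewer: approve refunds up to 100.
    return args.get("amount", 0) <= 100


kernel = MiniKernel(approver)
kernel.add_function("crm", "lookup_customer", lookup_customer)
kernel.add_function("billing", "issue_refund", issue_refund, requires_approval=True)

print(kernel.invoke("crm", "lookup_customer", customer_id="c42"))  # customer c42: tier=gold
print(kernel.invoke("billing", "issue_refund", customer_id="c42", amount=50))  # refunded 50.00 to c42
print(kernel.invoke("billing", "issue_refund", customer_id="c42", amount=500))  # billing.issue_refund blocked: approval denied
```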

Pros

- Modular and composable with minimal overhead, facilitating rapid AI agent prototyping and deployment.

- Multi-agent orchestration with collaborative, distributed intelligence capabilities.

- Strong enterprise focus with observability, security, and responsible AI support.

- Cross-language support broadens developer accessibility.

Cons

- Steeper learning curve due to unique architectural patterns and plugin system.

- Smaller community and ecosystem compared to more mature frameworks.

- Requires keeping pace with rapidly evolving AI models and APIs.

- Documentation and resources are good but less extensive than some competitors.

Enterprise Practice

Semantic Kernel excels at embedding AI agents into complex enterprise workflows where contextual reasoning, memory, and orchestration across multiple AI services and legacy systems matter. It allows automating nuanced business processes—like incident response or compliance checking—by combining AI reasoning with real-time data, integrated tools, and human oversight, all while maintaining robustness and auditability.

Ray

Ray is a distributed computing platform optimized for large-scale AI workloads. While not exclusively an agent framework, Ray supports building scalable multi-agent reinforcement learning and distributed AI applications.

- Key Strengths: High performance, excellent scalability, support for parallel execution.

- Ideal Use Cases: Commonly used in applications requiring large-scale distributed machine learning, reinforcement learning, and multi-agent system training, such as autonomous vehicles, recommendation engines, and fraud detection.

Ray (Python)

Setup & Basic Steps:

- Install Ray:

```bash
pip install ray
```

- Initialize Ray in your script:

```python
import ray

ray.init()
```

- Define remote actors for multi-agent behaviors:

```python
@ray.remote
class MyAgent:
    def act(self):
        return "Acting"
```

- Run multiple agents in parallel, leveraging Ray’s distributed infrastructure.

Resources: Ray Tutorials

Pros

- Runs parallel multi-agent workloads efficiently using Ray Core tasks and actors.

- Scales easily from local machines to clusters with Ray autoscaler and Kubernetes operator.

- Supports reinforcement learning and generative AI with RLlib and integrates with popular ML frameworks.

- The rich ecosystem includes Ray Serve for model deployment, Ray Tune for hyperparameter tuning, and debugging tools.

- Backed by a strong open-source community and commercial support from Anyscale.

Cons

- Steep learning curve mainly around Ray Core primitives like tasks and actors.

- Managing clusters with tools such as Ray Cluster Launcher or Kubernetes Operator requires infrastructure expertise.

- No built-in high-level coordination tools; developers build communication logic using Ray Actors or external messaging.

- Primarily Python-centric; libraries like RLlib and Ray Serve focus on Python, making multi-language support harder.

How This Works in Practice Inside an Enterprise Deployment: Ray

Ray powers Uber's autonomous vehicle fleet simulation, enabling rapid reinforcement learning across thousands of simulated agents to improve real-time decision-making capabilities. Its scalability and parallelism allow training complex models efficiently in highly dynamic environments.

LangChain & LangGraph

LangChain is an LLM orchestration framework known for modularity, integrating language models with external tools, memory, and APIs. LangGraph builds upon LangChain, adding graph-based orchestration for managing complex, stateful, and branching workflows suited for agent coordination.

LangChain

Basic Setup

```bash
pip install langchain langchain-openai
```

Build Simple Chain

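```python
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

# Example: Chat with OpenAI GPT (reads the OPENAI_API_KEY environment variable)
llm = ChatOpenAI(model="gpt-3.5-turbo")
prompt = ChatPromptTemplate.from_template("Tell me about {topic}.")
chain = prompt | llm  # pipe the formatted prompt into the chat model
response = chain.invoke({"topic": "multi-agent systems"})
print(response.content)
```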

Key Strengths

- Extensive ecosystem, strong memory handling, modular and flexible.

Ideal Use Cases

- LangChain excels in conversational agents and reasoning workflows with mostly linear or simple branching chains.

Pros:

- Extensive, mature ecosystem with a wide range of integrations for language models, memory, and external APIs.

- Highly modular and flexible, enabling rapid development of custom workflows and agents.

- Strong memory and context handling for improved conversational and reasoning capabilities.

- Good community support and ongoing active development.

Cons:

- Primarily focused on chain-based workflows, which may limit handling of complex, stateful, branching multi-agent processes.

- Requires careful management for scaling and robustness in production.

- Some learning curve due to many modular components and integrations.

How This Works in Practice Inside an Enterprise Deployment: LangChain

LangChain is used by AI companies to build advanced AI copilots that assist developers in writing, refactoring, and documenting code. For instance, Replit leverages LangChain to power their AI-enabled code completion and debugging assistants, helping millions of users write code faster with less friction. LangChain’s modular integrations enable seamless connection to databases, external APIs, and custom toolsets to enhance LLM capabilities.

Constructing Complex Workflows with LangGraph

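```python
from typing import TypedDict

from langgraph.graph import END, START, StateGraph  # pip install langgraph

State = TypedDict("State", {"query": str, "data": str, "response": str}, total=False)

# fetch_external_api, process_data and generate_response are your own functions:
# each takes the current State and returns a dict with the keys it updates.
builder = StateGraph(State)

# Build nodes
builder.add_node("FetchData", fetch_external_api)
builder.add_node("ProcessData", process_data)
builder.add_node("Respond", generate_response)

# Connect nodes in a graph
builder.add_edge(START, "FetchData")
builder.add_edge("FetchData", "ProcessData")
builder.add_edge("ProcessData", "Respond")
builder.add_edge("Respond", END)

# Compile and execute the graph
graph = builder.compile()
result = graph.invoke({"query": "latest orders"})
```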

External API Integration

Wrap calls to enterprise APIs, such as CRM or ticketing systems, as tools that agents can invoke.

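```python
import requests
from langchain_core.tools import tool

@tool
def crm_api(query: str) -> str:
    """Query the CRM API ("q" is a hypothetical parameter name)."""
    return requests.get("https://crm.example.com/api", params={"q": query}, timeout=10).text

response = crm_api.invoke({"query": "Get customer data"})
```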

Resources for Learning & Development

- LangChain Official Documentation

- Sample Projects & Tutorials on GitHub

- Community Forums and Slack Channels for real-time support

LangGraph

Key Strengths

- Explicit graph control, better error recovery, state management.

Ideal Use Cases

- LangGraph suits stateful, long-running workflows with branching, loops, and human-in-the-loop steps.

Pros:

- Adds graph-based orchestration for managing stateful, branching and complex multi-agent workflows.

- Explicit control over workflow graphs and better error handling and recovery.

- Designed for scalability and long-lived, stateful agent interactions.

- Built-in support for human-in-the-loop moderation and debugging.

Cons:

- More complex model that may require deeper understanding to implement effectively.

- Less mature than LangChain with a smaller community and ecosystem as of now.

- Proprietary platform options can add cost and deployment complexity.

Real-World Example: LangGraph

LangGraph is employed by enterprises like Ally Tech Labs to orchestrate complex multi-agent workflows involving human-in-the-loop moderation. Their solution uses LangGraph’s stateful graph-based orchestration to manage dynamic customer-facing AI agents that draft responses for review and improve over time through continuous evaluation. This application involves long-running, branching workflows where human agents collaborate with AI agents to deliver reliable and high-quality outcomes.

Agno

Agno is a high-performance, open-source Python framework designed to build and manage multi-agent AI systems with modular components like tools, memory, and reasoning capabilities. Agno emphasizes speed, composability, and developer productivity, offering an agent runtime (AgentOS) that includes a pre-built FastAPI app and a web UI for real-time orchestration, debugging, and deployment right out of the box.

Key Strengths:

- Minimal setup for rapid agent creation with Python classes, enabling custom agents to integrate models, external tools, and memory stores.

- Supports multi-modal inputs and outputs (text, audio, images), facilitating diverse AI workflows.

- Model-agnostic architecture allows seamless interoperability with popular LLM providers (OpenAI, Anthropic, Groq).

- Private, cloud-native runtime ensures data never leaves your environment, ideal for enterprise security.

- Built-in session, state, and human-in-the-loop management simplify complex workflows.

Ideal Use Cases:

- Teams building collaborative multi-agent workflows for market research, automation, or real-time decision-making.

- Production-ready AI assistants that require transparency, auditability, and extensibility.

Setup & Basic Steps:

- Install Agno via pip and define agents in Python with the Agent class.

- Compose agents declaratively with configurable models, memories, tools, and knowledge bases.

- Run and serve agents using the AgentOS FastAPI runtime and access the web UI for workflow control.

Sample Code:

```python
from agno.agent import Agent
from agno.models.anthropic import Claude

agent = Agent(
    model=Claude(id="claude-sonnet-4-5"),
    tools=[],
    markdown=True,
)

agent.print_response("Hello from Agno agent!", stream=True)
```

Pros:

- Highly modular, extensible Pythonic API minimizing boilerplate.

- Runtime and UI tooling included for smooth development and production deployment.

- Supports complex agent orchestration with high speed and low latency.

- Enterprise-ready security with full local control.

Cons:

- Newer framework with smaller community compared to legacy platforms.

- Requires Python expertise; no native support for Java environments.

- Advanced features might have a learning curve.

How This Works in Practice Inside an Enterprise Deployment: Agno

Agno powers teams building AI multi-agent workflows for market intelligence, combining autonomous reasoning with integrated tools and knowledge management—all managed via a flexible, fast runtime.

Selecting the Appropriate Multi-Agent AI Framework

Choosing the right multi-agent framework depends on your project requirements, team expertise, and workflow complexity. The table below highlights the key characteristics, ideal use cases, and target users for each framework:

Framework Selection in 2025

Enterprise Applications of Multi-Agent Frameworks

Key Industry Use Cases

- Customer Support Automation

Multi-agent frameworks enable agents to manage customer triage, respond to inquiries, and handle feedback loops efficiently. This reduces resolution times and improves customer satisfaction by automating and personalizing interactions.

- Sales and Marketing

Intelligent agents optimize lead nurturing and outreach campaigns by segmenting audiences, personalizing communications, scheduling follow-ups, and analyzing campaign effectiveness for continuous improvement.

- IT & Operations

Agents automate issue detection, resolution workflows, infrastructure monitoring, and task coordination, enhancing operational reliability and minimizing downtime through proactive response mechanisms.

Cross-Framework Collaboration

Hybrid use of leading frameworks, such as Ray for scalable distributed AI workloads combined with LangGraph for sophisticated workflow orchestration, is increasingly common. Platforms like Adopt AI facilitate seamless integration and orchestration across these disparate frameworks, enabling enterprises to leverage the unique benefits of each while maintaining centralized control.

Business Impact

- Faster time-to-value through accelerated development and deployment of multi-agent workflows.

- Reduced engineering workload by automating integration, testing, and orchestration processes.

- Enhanced operational efficiency and improved decision-making via real-time agent collaboration and data-driven insights.

- Improved scalability and user experience by dynamically orchestrating agents to handle varying workloads with reliability.

Challenges in Multi-Agent Framework Implementation

1. Coordination Chaos

Imagine ten autonomous agents each thinking they’re handling the right task, but in reality, duplicating efforts or conflicting with one another. For example, a “Retriever” agent fetching data already cached by a “Summarizer” means double the work and wasted resources.

How engineers fix it: Introduce an orchestrator or intent router to assign clear ownership and priority for tasks upfront—much like service mesh routing, but for AI agents.
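A minimal sketch of the intent-router idea follows, under stated assumptions: `Task`, `IntentRouter`, `register`, `plan`, and `complete` are hypothetical names, not part of any specific framework. Each intent has exactly one owning agent, tasks are ordered by priority, and any (intent, key) pair that is already cached or already scheduled is skipped, so the Retriever never repeats a fetch another agent has done.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Task:
    intent: str        # kind of work, e.g. "retrieve" or "summarize"
    key: str           # data the work is about, e.g. a document ID
    priority: int = 0  # lower number = scheduled earlier


@dataclass
class IntentRouter:
    """Hypothetical router: one owning agent per intent, plus a shared result cache."""
    owners: dict = field(default_factory=dict)  # intent -> agent name
    cache: dict = field(default_factory=dict)   # (intent, key) -> result, shared by all agents

    def register(self, intent: str, agent: str) -> None:
        # Refuse a second owner so two agents can never both claim the same intent.
        if self.owners.get(intent, agent) != agent:
            raise ValueError(f"{intent!r} is already owned by {self.owners[intent]!r}")
        self.owners[intent] = agent

    def plan(self, tasks):
        """Assign a batch: highest priority first, each (intent, key) at most once."""
        seen = set(self.cache)  # work that some agent has already completed
        assignments = []
        for task in sorted(tasks, key=lambda t: t.priority):
            if (task.intent, task.key) in seen:
                continue  # cached or duplicate request: skip it
            owner: Optional[str] = self.owners.get(task.intent)
            if owner is None:
                raise LookupError(f"no agent owns intent {task.intent!r}")
            seen.add((task.intent, task.key))
            assignments.append((owner, task))
        return assignments

    def complete(self, task: Task, result: str) -> None:
        self.cache[(task.intent, task.key)] = result


router = IntentRouter()
router.register("retrieve", "Retriever")
router.register("summarize", "Summarizer")
router.complete(Task("retrieve", "doc-7"), "cached text")  # fetched earlier
batch = [
    Task("summarize", "doc-42", priority=1),
    Task("retrieve", "doc-42", priority=0),
    Task("retrieve", "doc-42", priority=0),  # duplicate request
    Task("retrieve", "doc-7"),                # already cached
]
for agent, task in router.plan(batch):
    print(agent, task.intent, task.key)
# Retriever retrieve doc-42
# Summarizer summarize doc-42
```

In a production system the cache and ownership table would live in shared storage rather than in one process, but the rule is the same: decide ownership before any agent starts work.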

2. Debugging Distributed Decisions

Logs look like a dozen people chatting simultaneously in different Slack channels. Agents run asynchronously, messages arrive late or not at all, and tracing failures feels like piecing together a fractured conversation.

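Effective debugging: Treat each agent message as a traceable event, tagged with identifiers (such as a run ID and the ID of the message that triggered it) plus its context, and store it in event logs or vector databases. That observability lets teams reconstruct what happened during critical production incidents.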
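One way to make messages traceable is sketched below, assuming a simple in-memory store; `AgentEvent`, `EventLog`, and its methods are hypothetical names, and a real deployment would write to a persistent event store instead of a list. Every event carries a `trace_id` shared by the whole run and a `parent_id` pointing at the message that caused it, which is enough to rebuild the "fractured conversation" after the fact.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class AgentEvent:
    trace_id: str             # shared by every message in one workflow run
    span_id: str              # unique ID of this message
    parent_id: Optional[str]  # span that caused this message (None for the first one)
    agent: str
    action: str
    payload: dict
    ts: float = field(default_factory=time.time)


class EventLog:
    """In-memory stand-in for a persistent event store (hypothetical)."""

    def __init__(self):
        self.events = []

    def emit(self, agent, action, payload, parent=None):
        event = AgentEvent(
            trace_id=parent.trace_id if parent else uuid.uuid4().hex,
            span_id=uuid.uuid4().hex,
            parent_id=parent.span_id if parent else None,
            agent=agent,
            action=action,
            payload=payload,
        )
        self.events.append(event)
        return event

    def trace(self, trace_id):
        """All events of one run, in the order they were recorded."""
        return [e for e in self.events if e.trace_id == trace_id]

    def causal_chain(self, event):
        """Walk parent links back to the root: which messages led to this one?"""
        by_id = {e.span_id: e for e in self.events}
        chain = [event]
        while chain[-1].parent_id is not None:
            chain.append(by_id[chain[-1].parent_id])
        return [f"{e.agent}:{e.action}" for e in reversed(chain)]

    def to_jsonl(self):
        """One JSON object per line, ready to ship to a log pipeline."""
        return "\n".join(json.dumps(asdict(e)) for e in self.events)


log = EventLog()
plan = log.emit("Planner", "plan", {"goal": "summarize doc-42"})
fetch = log.emit("Retriever", "fetch", {"doc": "doc-42"}, parent=plan)
summary = log.emit("Summarizer", "summarize", {"chars": 1200}, parent=fetch)
print(log.causal_chain(summary))
# ['Planner:plan', 'Retriever:fetch', 'Summarizer:summarize']
```

This is the same parent/child span model that distributed-tracing tools use for microservices, applied to agent messages.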

3. Lifecycle and Version Drift

Deploying a new agent version without synchronizing the others can cause surprising failures: agents may misinterpret shared context or data formats, breaking workflows.

Engineer strategy: Maintain a versioned agent registry and use CI/CD pipelines for coordinated rollouts, ensuring all agents speak the same “language” and enabling quick rollbacks when needed.
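The registry idea can be sketched as follows, assuming each release declares which version of the shared message format (`schema`) it speaks; `AgentRelease`, `AgentRegistry`, `deploy`, and `rollback` are hypothetical names. Rollouts and rollbacks are all-or-nothing: a change is refused if it would leave agents speaking different schemas.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRelease:
    name: str
    version: tuple  # semantic version, e.g. (2, 0, 0)
    schema: int     # version of the shared message/context format this release speaks


class AgentRegistry:
    """Hypothetical registry: release history per agent, atomic schema-checked rollouts."""

    def __init__(self):
        self.history = {}  # agent name -> list of releases, newest last

    def active(self, name):
        return self.history[name][-1]

    @staticmethod
    def _check(proposed):
        schemas = {release.schema for release in proposed.values()}
        if len(schemas) > 1:
            raise RuntimeError(f"agents would speak mixed schemas {sorted(schemas)}")

    def deploy(self, *releases):
        """Roll out several releases together, or none of them."""
        proposed = {name: rs[-1] for name, rs in self.history.items()}
        proposed.update({r.name: r for r in releases})
        self._check(proposed)  # raises before anything is changed
        for r in releases:
            self.history.setdefault(r.name, []).append(r)

    def rollback(self, *names):
        """Revert the named agents to their previous releases, together or not at all."""
        proposed = {name: rs[-1] for name, rs in self.history.items()}
        for name in names:
            if len(self.history.get(name, [])) < 2:
                raise RuntimeError(f"no earlier release of {name!r} to roll back to")
            proposed[name] = self.history[name][-2]
        self._check(proposed)
        for name in names:
            self.history[name].pop()


registry = AgentRegistry()
registry.deploy(AgentRelease("retriever", (1, 0, 0), schema=1),
                AgentRelease("summarizer", (1, 0, 0), schema=1))
try:
    registry.deploy(AgentRelease("summarizer", (2, 0, 0), schema=2))  # alone: rejected
except RuntimeError as err:
    print(err)  # agents would speak mixed schemas [1, 2]
registry.deploy(AgentRelease("retriever", (2, 0, 0), schema=2),
                AgentRelease("summarizer", (2, 0, 0), schema=2))      # coordinated: accepted
registry.rollback("retriever", "summarizer")                        # consistent rollback
print(registry.active("summarizer").version)  # (1, 0, 0)
```

In a CI/CD pipeline, the `deploy` check would typically run as a gate before any agent is actually restarted.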

4. Security & Compliance Hassles

Ensuring encrypted messaging, strict access controls, and audit trails in multi-agent communications is complicated but non-negotiable, especially in regulated sectors where trust and compliance depend on it.
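Two of these controls, message authentication and access control with an audit trail, can be sketched with the Python standard library alone. `SecureBus`, `sign`, and `deliver` are hypothetical names. HMAC signing proves who sent a message and that it was not altered, but it does not encrypt anything; confidentiality is assumed to come from TLS on the underlying channel.

```python
import hashlib
import hmac
import json
import time


class SecureBus:
    """Hypothetical message bus with HMAC signatures, an allow-list, and an audit trail.

    HMAC provides integrity and sender authentication, not confidentiality;
    encryption in transit is assumed to come from TLS on the underlying channel.
    For brevity the bus holds every agent's key; in practice each agent signs with its own.
    """

    def __init__(self, keys, acl):
        self.keys = keys  # agent name -> shared secret (bytes)
        self.acl = acl    # set of (sender, tool) pairs that are allowed
        self.audit = []   # append-only list of every delivery decision

    def sign(self, sender, tool, payload):
        body = json.dumps({"sender": sender, "tool": tool, "payload": payload}, sort_keys=True)
        tag = hmac.new(self.keys[sender], body.encode(), hashlib.sha256).hexdigest()
        return {"body": body, "tag": tag}

    def deliver(self, message):
        header = json.loads(message["body"])
        sender, tool = header["sender"], header["tool"]
        key = self.keys.get(sender)
        expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest() if key else ""
        if not hmac.compare_digest(expected, message["tag"]):  # constant-time comparison
            decision = "rejected: bad signature"
        elif (sender, tool) not in self.acl:
            decision = "rejected: not authorized"
        else:
            decision = "accepted"
        self.audit.append({"ts": time.time(), "sender": sender, "tool": tool, "decision": decision})
        return decision == "accepted"


bus = SecureBus(keys={"Summarizer": b"s-secret", "Retriever": b"r-secret"},
                acl={("Summarizer", "summarize")})
good = bus.sign("Summarizer", "summarize", {"doc": "doc-42"})
denied = bus.sign("Retriever", "summarize", {"doc": "doc-42"})
tampered = {"body": good["body"].replace("doc-42", "doc-99"), "tag": good["tag"]}
for msg in (good, denied, tampered):
    bus.deliver(msg)
print([entry["decision"] for entry in bus.audit])
# ['accepted', 'rejected: not authorized', 'rejected: bad signature']
```

Note that every decision, including rejections, lands in the audit log; that is usually what compliance reviews ask for.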

5. Performance Blind Spots

Measuring real-time success rates and resource usage across a swarm of agents is tricky without monitoring tools built for multi-agent concurrency and stateful workflows.
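As a starting point before adopting dedicated tooling, per-agent call counts, error counts, and latency percentiles can be collected with a small wrapper. The sketch below is an assumption-laden illustration: `AgentMetrics`, `track`, and `summary` are hypothetical names, samples are kept in memory, and percentiles use the simple nearest-rank method.

```python
import math
import time
from collections import defaultdict
from contextlib import contextmanager


class AgentMetrics:
    """Hypothetical per-agent collector: call count, error count, latency percentiles."""

    def __init__(self):
        self.latencies = defaultdict(list)  # agent -> list of durations in seconds
        self.errors = defaultdict(int)      # agent -> number of failed calls

    @contextmanager
    def track(self, agent):
        start = time.perf_counter()
        try:
            yield
        except Exception:
            self.errors[agent] += 1
            raise  # record the failure, but let the caller handle it
        finally:
            self.latencies[agent].append(time.perf_counter() - start)

    @staticmethod
    def percentile(values, p):
        # Nearest-rank percentile: smallest sample with at least p% of samples <= it.
        ordered = sorted(values)
        rank = max(1, math.ceil(p * len(ordered) / 100))
        return ordered[rank - 1]

    def summary(self):
        return {
            agent: {
                "calls": len(samples),
                "errors": self.errors[agent],
                "p50_s": self.percentile(samples, 50),
                "p95_s": self.percentile(samples, 95),
            }
            for agent, samples in self.latencies.items()
        }


metrics = AgentMetrics()
for _ in range(3):
    with metrics.track("Retriever"):
        time.sleep(0.01)  # stand-in for real agent work
try:
    with metrics.track("Summarizer"):
        raise TimeoutError("model call timed out")
except TimeoutError:
    pass
for agent, stats in metrics.summary().items():
    print(agent, stats)
```

Keeping every sample is fine for a sketch; a long-running system would export these numbers to a metrics backend or use a streaming histogram instead.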

Why Multi-Agent Systems Are Powerful but Hard to Scale

Multi-agent systems let you break complex workflows into specialized, collaborating agents. This yields modularity, parallelism, and emergent intelligence. But scaling them is tough: coordinating many agents, gluing APIs and tools, managing shared state, and enforcing governance all become engineering bottlenecks.
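Shared state is a good example of why this gets hard: two agents can read the same value and then overwrite each other's updates. One common remedy, sketched here under the assumption of a single-process store, is optimistic concurrency: every write states which version it read, and stale writes are refused. `SharedState`, `read`, and `write` are hypothetical names.

```python
import threading


class SharedState:
    """Hypothetical blackboard with optimistic concurrency control."""

    def __init__(self):
        self._lock = threading.Lock()
        self._data = {}  # key -> (version, value)

    def read(self, key):
        with self._lock:
            return self._data.get(key, (0, None))

    def write(self, key, value, expected_version):
        """Succeed only if nobody else wrote since `expected_version` was read."""
        with self._lock:
            current, _ = self._data.get(key, (0, None))
            if current != expected_version:
                return False  # stale write: the caller must re-read and retry
            self._data[key] = (current + 1, value)
            return True


state = SharedState()
version, _ = state.read("plan")  # both agents read version 0
print(state.write("plan", "draft from Planner", version))  # True
print(state.write("plan", "draft from Critic", version))   # False: stale version
version, current = state.read("plan")  # re-read, then retry on fresh state
print(state.write("plan", current + " (reviewed)", version))  # True
print(state.read("plan"))  # (2, 'draft from Planner (reviewed)')
```

The same compare-and-set pattern carries over to distributed stores that support conditional writes, which is what lets it scale beyond one process.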

Rethinking the Multi-Agent Infrastructure Layer

Beyond the agents themselves, you need a scaffolding layer that handles:

- Dynamic wiring and orchestration

- Tool & API integration

- Context sharing, memory, and data flow

- Security, auditability, and governance

- Agent lifecycle, autoscaling, and error handling

This is the layer below the agent frameworks you already use (LangGraph, CrewAI, etc.).
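Error handling is the easiest item on that list to illustrate in isolation. The sketch below assumes agents are plain callables; `run_with_retries` is a hypothetical helper, not an API of LangGraph, CrewAI, or Adopt AI. It retries with exponential backoff plus a little random jitter and returns a result instead of raising, so one failing agent does not crash the rest of the workflow.

```python
import random
import time


def run_with_retries(agent_fn, task, *, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call agent_fn(task), retrying with exponential backoff plus jitter.

    Returns (ok, result_or_error) instead of raising, so a failing agent is
    isolated from the rest of the workflow.
    """
    last_error = None
    for attempt in range(attempts):
        try:
            return True, agent_fn(task)
        except Exception as exc:  # a real system would retry only transient errors
            last_error = exc
            if attempt < attempts - 1:
                delay = base_delay * 2 ** attempt  # 0.1 s, 0.2 s, 0.4 s, ...
                sleep(delay * (1 + random.random() * 0.1))  # up to 10% jitter
    return False, last_error


calls = {"count": 0}


def flaky_agent(task):
    """Stand-in agent that fails twice with a transient error, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("upstream API unavailable")
    return f"processed {task}"


print(run_with_retries(flaky_agent, "order-17"))  # (True, 'processed order-17')
print(run_with_retries(lambda t: 1 / 0, "order-18", sleep=lambda s: None))
# (False, ZeroDivisionError('division by zero'))
```

The injectable `sleep` parameter is a design choice for testability: tests can record the backoff delays without actually waiting.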

Adopt AI: Automating the Plumbing, So You Focus on Logic

Adopt AI sits under your multi-agent logic and automates infrastructure:

- ZAPI (Zero-Shot API Ingestion): Instantly wrap external APIs as agent tools.

- ZACTION (Zero-Shot Action Generation): Generate multi-step workflows for agents on the fly, without hand-written glue code.

- No-Code Multi-Agent Builder: Visual canvas to design agent networks, define triggers, connect tools.

- Security & Governance Built-In: Authorization, audits, isolation, policy enforcement.

This lets teams focus on what agents do, not on how they talk to each other or to external systems.

How Adopt AI Enhances LangGraph, CrewAI & More

Instead of replacing frameworks like LangGraph or CrewAI, Adopt AI augments them:

- It injects APIs as agent tools automatically

- It scaffolds orchestration edges and command flows

- It provides observability, logging, and access control

- It helps agents scale without collapsing under coordination complexity

For example, in a document analysis pipeline, your LangGraph graph handles the logic, while Adopt AI connects agents to storage APIs, wires dependencies, and controls who can call the summarization or extraction tools.
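The dependency-wiring part of that example can be sketched with the standard library's `graphlib` module. This is neither LangGraph's API nor Adopt AI's. The step functions, `STEPS`, `DEPENDS_ON`, and `run_pipeline` are hypothetical stand-ins: each step receives the outputs of the steps it depends on and runs only after all of them have finished.

```python
from graphlib import TopologicalSorter


# Hypothetical pipeline steps; each receives a dict of its dependencies' outputs.
def fetch(deps):
    return "Quarterly report: revenue grew in Q3."  # stand-in for a storage API call


def extract(deps):
    return {"entities": ["Q3", "revenue"], "source_chars": len(deps["fetch"])}  # stand-in


def summarize(deps):
    return f"Summary of {len(deps['fetch'])} characters"  # stand-in for an LLM call


def report(deps):
    return {"summary": deps["summarize"], "entities": deps["extract"]["entities"]}


STEPS = {"fetch": fetch, "extract": extract, "summarize": summarize, "report": report}
DEPENDS_ON = {  # step -> steps whose output it needs
    "extract": {"fetch"},
    "summarize": {"fetch"},
    "report": {"extract", "summarize"},
}


def run_pipeline(steps, depends_on):
    """Run every step once all of its dependencies have produced output."""
    outputs = {}
    for name in TopologicalSorter(depends_on).static_order():
        deps = {d: outputs[d] for d in depends_on.get(name, ())}
        outputs[name] = steps[name](deps)
    return outputs


outputs = run_pipeline(STEPS, DEPENDS_ON)
print(outputs["report"])
# {'summary': 'Summary of 37 characters', 'entities': ['Q3', 'revenue']}
```

Running steps sequentially keeps the sketch short; `TopologicalSorter` also offers `get_ready()` and `done()` for dispatching independent steps, such as `extract` and `summarize` here, in parallel.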

Conclusion

Multi-agent frameworks are fundamentally redefining how intelligent systems interact, coordinate, and execute complex tasks. Their true strength lies in enabling collaboration, scalability, and adaptability, capabilities that are essential for modern enterprises striving for operational excellence.

With powerful enablers like Adopt AI, organizations can accelerate the adoption of multi-agent frameworks in a secure, efficient, and scalable manner. Adopt AI’s automation, orchestration, and governance features empower enterprises to harness the full potential of collaborative agent networks while ensuring compliance and reliability.

As multi-agent systems continue to evolve and proliferate, enterprises that embrace these technologies will be better equipped to innovate, optimize workflows, and deliver superior user experiences in an increasingly complex and dynamic business landscape.

FAQs

Q1: What is a multi-agent framework and why is it important?

A multi-agent framework is a software platform that enables multiple autonomous AI agents to collaborate and coordinate to solve complex tasks more effectively than individual agents. These frameworks are vital for building scalable, resilient, and flexible intelligent systems that automate distributed workflows.

Q2: What are the main challenges in building multi-agent AI systems?

Key challenges include coordination complexity among autonomous agents, difficulty in monitoring and debugging distributed interactions, lifecycle management of interconnected agents, security and compliance concerns in agent communication, and measuring performance accurately in dynamic multi-agent environments.

Q3: What benefits do multi-agent frameworks offer?

They enable modular, specialized agents that can be independently developed and scaled; support parallelism for improved efficiency; improve fault tolerance by isolating failures; and facilitate adaptive, real-time responses to changing environments.

Q4: What is the best framework for AI agents?

It depends on your needs: LangChain for general LLM application building, AutoGen or CrewAI for multi-agent collaboration, LangGraph for stateful workflows, Botpress or Langflow for low-code development, and Semantic Kernel for Microsoft ecosystems.
