Discover why mapping your app’s APIs is the essential first step in building agentic experiences and enabling LLMs to take real action.


What exactly is an 'agentic' application?

Simply put - it's one where users can accomplish tasks and achieve outcomes using natural language commands.

At the heart of these agentic apps is the mechanism of Tool Calling, the critical capability that enables Large Language Models (LLMs) to move beyond mere text generation to actually invoking specific actions and functions within applications.
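To make the mechanism concrete, here is a minimal sketch of a tool-calling dispatch step. It assumes the model returns its chosen action as a JSON object with `name` and `arguments` fields (the exact shape varies by provider). The `update_lead` function and the hard-coded model output are hypothetical stand-ins, and no real LLM is called.

```python
import json

# Hypothetical application function the agent is allowed to call.
def update_lead(lead_id: str, status: str) -> dict:
    # A real implementation would call the app's own API here.
    return {"lead_id": lead_id, "status": status, "updated": True}

# Registry mapping tool names to callables.
TOOLS = {"update_lead": update_lead}

def dispatch(tool_call_json: str) -> dict:
    """Parse a model-emitted tool call and invoke the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call["arguments"])

# Stand-in for what a model might emit for "mark lead 42 as qualified".
model_output = '{"name": "update_lead", "arguments": {"lead_id": "42", "status": "qualified"}}'
result = dispatch(model_output)
```

The registry is the important part: the model can only trigger what the application has explicitly exposed as a named, callable tool.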


And while LLMs typically capture the spotlight, APIs are in fact the foundational building blocks that underpin this entire tool calling mechanism, because every meaningful action a user takes within an application - clicking buttons, submitting forms, or navigating complex workflows - is ultimately executed through underlying API calls.

And to replicate and automate these user interactions, agents require direct and structured access to the same API infrastructure, translated into a format that LLMs can reliably understand and work with. Therefore, for applications aiming to become genuinely 'Agentic,' the first critical milestone they must achieve is to comprehensively map, clearly document, and contextually structure their entire API-driven capability landscape. Without this, you’ll have assist and navigation copilots, but not an agent experience for your app that can actually get work done for users.

In this article, we'll dive into the two-pronged challenge that makes it so difficult for apps to map their capability landscape →

- API Discovery - systematically capturing and mapping out what your application can do;

- LLM Readiness - structuring these APIs so they can be easily consumed and orchestrated by language models.

We'll also explore why today's agent-building platforms shift this foundational burden onto product and development teams, leading to significant scaling bottlenecks. And lastly, we'll examine how automated solutions, such as Adopt's Zero Shot API Ingestion (ZAPI), empower any application to rapidly generate comprehensive, LLM-ready API toolkits, dramatically reducing manual overhead and accelerating the path to becoming agentic.

Why API Discovery is the first step in building an Agentic Application

For an application to become agentic, it needs to do more than just expose a few endpoints. It needs to externalize its entire capability landscape - the complete picture of what the app can do.

This landscape is made up of APIs, the knowledge base, core entities (business objects), frontend URLs, and every other data point that defines the application's functionality. These are the ‘tools’ an agent needs to reason over, understand context from, and ultimately invoke to meet a user’s intent—whether that’s fetching a report, updating a lead, or orchestrating a multi-step workflow.

Among these components, APIs are the most central. They represent the actionable layer of the application—what can be executed, triggered, automated. Without accessible and well-structured APIs, agents have nothing to call. So when teams begin the journey of building agentic capabilities, the real work starts long before prompts or orchestration flows. It starts with building the toolset.

That tool-building process comes down to two key stages: API Discovery and LLM Readiness.

API Discovery

API Discovery is the act of uncovering and mapping out all the real, executable capabilities of your app—often spread across services, teams, and outdated documentation.
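As a rough illustration of what discovery can involve, the sketch below builds an endpoint inventory from observed requests by collapsing ID-like path segments into a placeholder. The request list is hypothetical sample data, and treating only purely numeric segments as IDs is a simplifying assumption.

```python
import re
from collections import Counter

# Hypothetical observed requests as (method, path) pairs, e.g. from access logs.
observed = [
    ("GET", "/usages/teams/17/products"),
    ("GET", "/usages/teams/42/products"),
    ("POST", "/teams/42/members"),
    ("GET", "/usages/teams/17/products"),
]

# Assumption: only purely numeric segments are treated as IDs.
ID_SEGMENT = re.compile(r"^\d+$")

def to_template(path: str) -> str:
    """Replace ID-looking path segments with a placeholder."""
    parts = ["{id}" if ID_SEGMENT.match(p) else p for p in path.split("/")]
    return "/".join(parts)

def build_inventory(requests):
    """Count how often each (method, templated path) pair was seen."""
    return Counter((method, to_template(path)) for method, path in requests)

inventory = build_inventory(observed)
```

Here the three `GET` requests collapse into a single `/usages/teams/{id}/products` entry, which is the kind of deduplicated view a capability map needs.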

LLM Readiness

Once discovered, those APIs need to be transformed: enriched with context, business usage logic, and RBAC and auth requirements, so an LLM can reason about when, why, and how to call them. This makes them ‘LLM Ready’.
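One piece of that enrichment, RBAC, can be sketched as a filter applied before the model ever sees the tool list. The tool names, roles, and the `ToolSpec` structure below are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field

@dataclass
class ToolSpec:
    name: str
    description: str
    allowed_roles: set = field(default_factory=set)

# Hypothetical tool catalog; names and roles are illustrative only.
CATALOG = [
    ToolSpec("get_subscription", "Fetch a user's current subscription.", {"support", "admin"}),
    ToolSpec("cancel_plan", "Cancel a user's plan.", {"admin"}),
]

def tools_for_role(role: str, catalog=CATALOG):
    """Return only the tools this role may invoke, so the model never sees the rest."""
    return [t for t in catalog if role in t.allowed_roles]

support_tools = [t.name for t in tools_for_role("support")]
admin_tools = [t.name for t in tools_for_role("admin")]
```

Filtering up front is a design choice: the agent cannot attempt an action the user is not permitted to take, because that tool is never offered to the model.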

These two steps form the foundation for any agentic transformation.

But both are riddled with challenges that today’s tooling has largely ignored.

Let’s get into them one at a time.

Why Most Teams Struggle With Mapping Their Own APIs

In theory, API discovery sounds simple enough: create a single source of truth that comprehensively maps all your application’s APIs. One unified place that captures what each endpoint does, where it lives, and how it’s used. But reality is different - most teams, from small startups to large enterprises, do not have their API docs in place.

And there are many nuanced reasons why this is the case. Here are a few →

- No One Owns API Knowledge Holistically: Different teams own different parts of the app, and no one person or team is responsible for maintaining the complete picture.

- Outdated Documentation: APIs evolve much faster than documentation can keep up. What was true a sprint ago is likely outdated today.

- Internal APIs Aren’t Treated Like Products: Unlike external APIs, internal endpoints rarely get the same level of care or documentation, because they’re assumed to be “known” by the teams that built them.

- Org Structures Reinforce the Fragmentation: Microservices split capabilities across pods or teams. What starts as autonomy becomes opacity.
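The documentation drift described in the list above can be made visible with a simple comparison. This is a minimal sketch with hypothetical endpoint sets; in practice the "documented" side might come from a spec file and the "observed" side from traffic.

```python
# Hypothetical inputs: endpoints listed in the docs vs. endpoints seen in traffic.
documented = {("GET", "/teams/{id}"), ("POST", "/teams"), ("GET", "/reports/{id}")}
observed = {("GET", "/teams/{id}"), ("POST", "/teams"), ("DELETE", "/teams/{id}")}

def drift_report(documented, observed):
    """Split endpoints into undocumented (live but not in docs) and stale (in docs but unseen)."""
    return {
        "undocumented": sorted(observed - documented),
        "stale": sorted(documented - observed),
    }

report = drift_report(documented, observed)
```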

Now, this lack of documentation leads to serious operational pain:

You have PMs running around Slack chasing engineering teams for clarity on what an endpoint does.

You have new developers duplicating existing functionality because they didn’t know the API already existed.

And worst of all, you have workflows you think the app supports - until your agent hits a wall.

Where Current API Documentation Approaches Fall Short

Even when teams try to solve this, the go-to approaches often fail:

- Manual Processes Are a Time Sink: Assigning someone to comb through codebases, gather Postman exports, and manually document APIs doesn’t scale - especially in fast-moving teams.

- Low Accuracy From Human Oversight: Manual discovery usually gets you 80–85% coverage at best. There’s always an edge case, an undocumented version, or a rogue parameter that slips through.

At this point, you might say: aren’t there tools that already do API discovery?

Yes - there are observability and traffic-monitoring tools that can help index endpoints across your infrastructure.

But even if you do manage to get your API spec in order, you’re only halfway there.

Because the real challenge isn’t just discovering your APIs. It’s making them LLM-ready.

And that’s where things get a whole lot trickier.

Beyond Specs: Structuring APIs for Language Models

Imagine you’re a PM at a spend management application. One of the capabilities you offer is allowing users to view the usage of their purchased software products across different teams.

You have an API that powers this capability—and in your Swagger or Postman files, it looks like this:

    GET /usages/teams/{team_id}/products

    Headers:
    {
      "api-key": "{api-key}",
      "Content-Type": "application/json"
    }

    Payload:
    {}

    Responses:
    {}

Now, just like any PM or engineer glancing at this raw spec, an LLM will struggle to understand what this API actually does. There’s no business logic. No explanation of when or why it should be used. No real context. To an agent, this is just plumbing.

What Agent-Ready Looks Like

Now here’s what that same endpoint looks like once it’s been made LLM-ready:

GET /usages/teams/{team_id}/products

Name: Team Product Usage Details

Purpose: Retrieve product usage information for a specific team

Context: Part of a usage management workflow that helps organizations analyze software spend and identify underutilized tools

Workflow: One step in a broader insight-gathering process, possibly used alongside budget reports or renewal forecasting

Query Parameters: Supports sorting (e.g., by status) and pagination (e.g., using lastItemId and lastSortId)

Returns: Team-specific product usage data and associated metadata

LLM Prompt Template:

"Retrieve product usage information for a specific team identified by {team_id}. Support sorting by various fields and pagination for efficient navigation."

This is the real difference: not just what an endpoint does, but why, when, and how it should be used. That’s what LLMs need to reason and act. Now, let’s get into how the widely used Agent Frameworks push the burden of this tool building onto their users.
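Before moving on, here is a minimal sketch of how the enriched description above could be turned into a tool definition in the JSON-Schema style commonly used for function calling. The wrapper fields (`name`, `description`, `parameters`) are an assumption, since the exact format differs between providers, and `sort_by` is a hypothetical parameter name; only `team_id`, `lastItemId`, and `lastSortId` come from the description.

```python
import json

# Enriched metadata for the endpoint, taken from the description above.
enriched = {
    "method": "GET",
    "path": "/usages/teams/{team_id}/products",
    "name": "Team Product Usage Details",
    "purpose": "Retrieve product usage information for a specific team",
    "context": "Part of a usage management workflow that helps organizations "
               "analyze software spend and identify underutilized tools",
}

def to_tool_definition(meta: dict) -> dict:
    """Build a JSON-Schema-style tool definition from enriched endpoint metadata."""
    return {
        # Tool names are usually restricted to identifier-like strings.
        "name": meta["name"].lower().replace(" ", "_"),
        "description": f'{meta["purpose"]}. {meta["context"]}.',
        "parameters": {
            "type": "object",
            "properties": {
                "team_id": {"type": "string", "description": "ID of the team"},
                # 'sort_by' is a hypothetical name; the cursors come from the text.
                "sort_by": {"type": "string", "description": "Field to sort by, e.g. status"},
                "lastItemId": {"type": "string", "description": "Pagination cursor"},
                "lastSortId": {"type": "string", "description": "Pagination cursor"},
            },
            "required": ["team_id"],
        },
    }

tool = to_tool_definition(enriched)
print(json.dumps(tool, indent=2))
```

The point of the sketch is that the purpose and context text travel with the schema, so the model sees why the endpoint exists and not only its path.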

Why Agent Frameworks Aren’t Turnkey: You Still Have to Build the Toolset

Open-source Agent Frameworks like LangChain, CrewAI, and AutoGen are incredibly powerful for building custom agents. But they all share one fundamental assumption: that your APIs are already LLM-ready. These platforms give you orchestration logic, memory management, and prompt handling.

But when it comes to defining what an agent can actually do—you have to do all the heavy lifting.

Take LangChain’s own guide on how to build an agent: it walks through the manual API wiring and prompt chaining you need to do to build even the simplest of agent workflows.

Let’s say you’re a developer building an internal support agent that can:

- Fetch customer subscription details

- Update billing preferences

- Cancel a plan

To make this agent functional, you first need to:

- Discover the relevant APIs across your app or microservices

- Manually document their parameters, auth headers, and expected responses

- Write prompt templates or function descriptions that LLMs can interpret

- Wire them into the orchestration logic of LangChain or CrewAI

Here’s what that might look like in LangChain:

    # Legacy-style LangChain imports; module paths differ in newer releases.
    from langchain.agents import initialize_agent, Tool
    from langchain.llms import OpenAI

    # You define the tools manually
    def get_subscription_details(user_id):
        # Call to your API
        ...

    tools = [
        Tool(
            name="Fetch Subscription",
            func=get_subscription_details,
            description="Use this to fetch current subscription info for a user by user_id",
        )
    ]

    agent = initialize_agent(tools, OpenAI(), agent="zero-shot-react-description")

Now imagine doing this for every capability in your app. Each one needs its own custom wrapper. Its own description. Its own testing and validation. And as your app evolves (new versions, new params), you’re stuck maintaining it all.

When you need to ‘agentify’ your entire app using these frameworks, three key bottlenecks show up →

- The Problem of Coverage

  - You end up exposing only a narrow subset of actions because of the setup overhead.

  - Important but less common workflows are skipped.

- The Problem of Time

  - Every new action requires writing and testing custom wiring code.

  - Building one small agent becomes a multi-week project.

- The Problem of Scale

  - Teams can’t keep up as apps grow.

  - Orchestration logic and tool definitions don’t scale linearly.

The result? You end up with agents that are brittle, toolsets that are shallow, and teams that are blocked by the wiring work instead of shipping powerful agentic experiences for their end users.

And that’s the exact gap we built Adopt’s Agent Builder to close.

How Adopt AI Automates API Discovery and LLM Readiness with Zero Setup

At Adopt AI, we recognized these problems from the get-go and built the Agent Builder Platform with a singular goal: to help product and engineering teams unlock the full capability of their applications, out of the box.

Instead of expecting teams to manually discover, document, and describe every endpoint, we built a proprietary system called ZAPI (Zero Shot API Ingestion) to automate both API discovery and LLM readiness in one seamless pipeline.

What Is ZAPI? A Zero-Shot Way to Map and Structure APIs for AI Agents

Here’s how it works:

Step 1: Simulate Real User Behavior

We deploy a computer-use agent that systematically navigates your application like a power user: clicking buttons, opening drop-downs, running searches, and performing every workflow possible.

Step 2: Ingest the Capability Surface

As this exploration happens, we capture the underlying capability landscape exposed through the application, including APIs, payload structures, and click-result behaviors, across every meaningful workflow.

Step 3: Structure and Categorize Tools for Agent Use

What makes ZAPI powerful isn’t just what it extracts - it’s how it organizes and transforms that data.

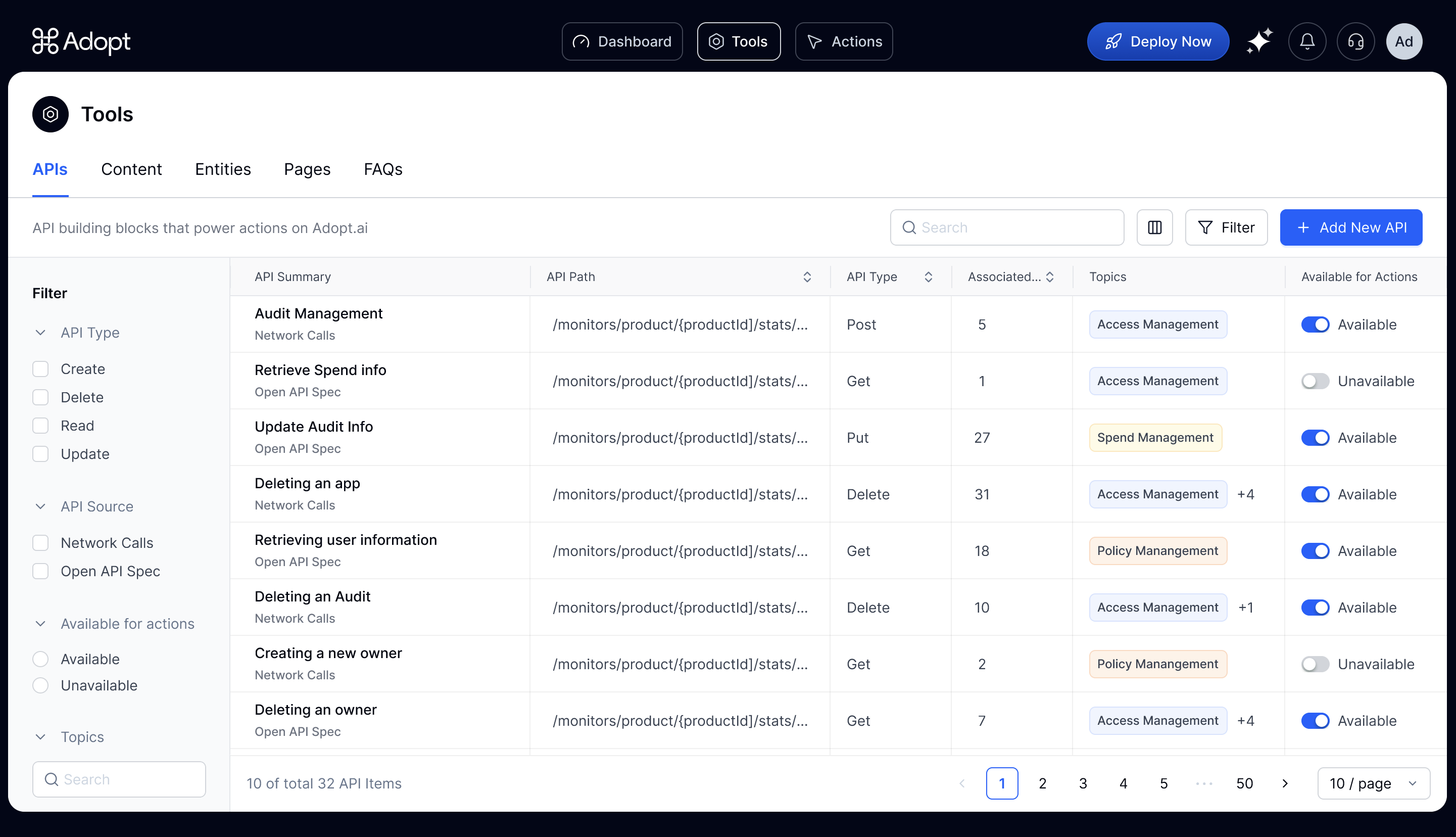

The Agent Builder platform categorizes this intelligence into five distinct tool types →

- APIs: Endpoints discovered and enriched with names, descriptions, sample calls, and prompt examples.

- Entities: Business objects like users, campaigns, tickets—enriched with structured values and relationships.

- Pages: URL routes your app exposes, mapped for navigation and page-based commands.

- FAQs: Extracted knowledge snippets from support documentation to handle common questions.

- Content: Knowledge base articles tied back to specific capabilities.

Together, these components become the toolset from which agent actions are constructed.

It’s this cross-linked, semantically enriched structure that enables LLMs to accurately invoke the right tools to meet the user’s intent.
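To illustrate what a cross-linked toolset might look like as a data structure, here is a minimal sketch covering the five tool types listed above. The `Tool` class, the IDs, and the link layout are hypothetical and are not Adopt's actual schema.

```python
from dataclasses import dataclass, field
from enum import Enum

class ToolType(Enum):
    API = "api"
    ENTITY = "entity"
    PAGE = "page"
    FAQ = "faq"
    CONTENT = "content"

@dataclass
class Tool:
    id: str
    type: ToolType
    description: str
    related: list = field(default_factory=list)  # ids of cross-linked tools

# Hypothetical entries; ids and links are illustrative only.
toolset = {
    t.id: t for t in [
        Tool("api.get_team_usage", ToolType.API, "Retrieve product usage for a team",
             related=["entity.team", "page.usage_dashboard"]),
        Tool("entity.team", ToolType.ENTITY, "A team within the organization"),
        Tool("page.usage_dashboard", ToolType.PAGE, "Route showing usage by team"),
    ]
}

def linked(tool_id: str, wanted: ToolType):
    """Follow cross-links from one tool to related tools of a given type."""
    return [toolset[r] for r in toolset[tool_id].related if toolset[r].type is wanted]

pages = [t.id for t in linked("api.get_team_usage", ToolType.PAGE)]
```

With links like these, an agent that calls an API can also point the user to the relevant page or explain the entity involved.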

The Future Is Agentic - And It Starts with Tools

We believe APIs will remain the backbone of agentic systems for the foreseeable future. Without structured, callable capabilities, agents are just talkers - not doers. As more applications move toward autonomous user experiences, the first step will always be the same: map your capabilities and make them consumable by agents.

And the easiest way to do that? Use Adopt AI.

We give your app a complete, LLM-ready toolset—automatically. So you can stop documenting, stop wiring, and start shipping the agentic experiences your users are already asking for.


