Go beneath the surface of agentic AI systems. Discover the hidden design layers—reasoning, recovery, and trust—that shape the invisible interface.

The shift we didn’t see coming

If the last decade of UX was about simplifying what people see, the next one is about managing what they can’t.

That became clear to me during our recent Adopt AI webinar, Uncovering the Blind Spots: Designing Agentic AI Experiences Beyond the GUI, where I had the privilege of moderating a conversation with our UX leader, Edward Chu. Edward has been leading design research on how agentic systems reason, recover, and earn trust at scale.

We talked about what it means to design for systems that think—not just respond—and what most teams miss when they move from an AI prototype to a live, agentic product.

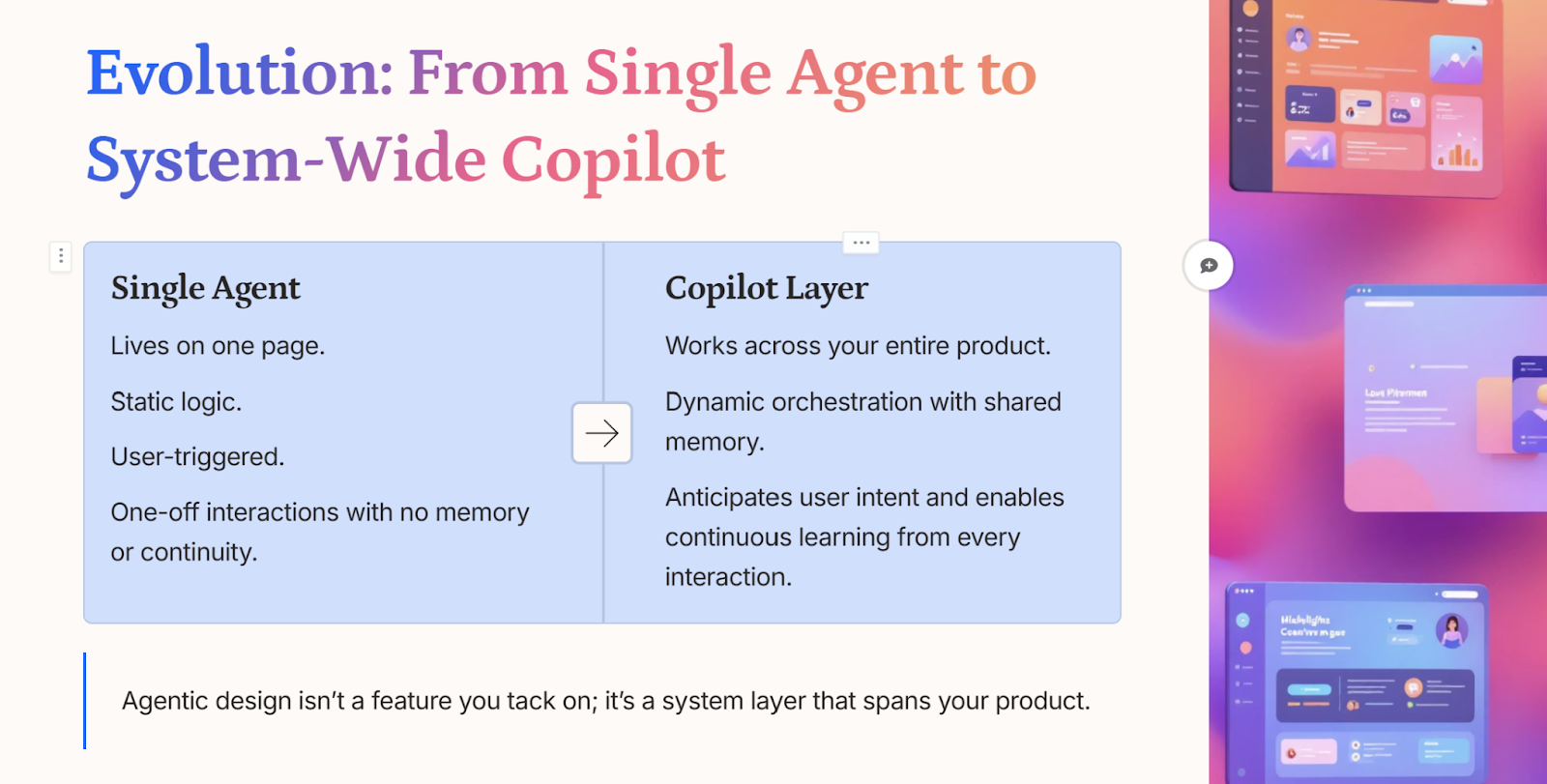

Somewhere between “shipping an agent” and “building an agentic system,” something gets lost. As Edward put it:

“Everyone’s building agents. Few are building systems.”

That idea stuck with me because it captures the tension in this new era of design: it’s not enough to make an AI seem intelligent. It has to behave responsibly.

And that starts by understanding what’s hiding beneath the surface.

The 5 blind spots you can’t design around

When we talk about “agentic design,” the focus tends to be on the shiny parts — the conversational UI, the prompts, the integrations. But what we found in our work (and in this discussion) is that the hardest design problems live underneath: orchestration, observability, recovery, lifecycle, and trust.

These are the five hidden blind spots every team eventually encounters once they move beyond the demo.

1. Orchestration: When reasoning breaks under pressure

Launching an agent is easy. Scaling one that reasons reliably across workflows is where the real work begins.

Too often, orchestration is treated like choreography — a fixed series of steps — instead of what it really is: a living network of dependencies.

As Edward described it, most teams design for success cases rather than for the messy, human reality of use. Real systems need to sequence intelligently, recover context, and know when to pause for confirmation. Otherwise, they’ll do the right thing at the wrong time and lose user trust in the process.
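
A minimal Python sketch can make the difference between a fixed script and a network of dependencies more concrete. It assumes a hypothetical workflow in which each step declares its prerequisites and whether it needs user confirmation; `Step`, `run_workflow`, and the refund steps are illustrative names, not part of any Adopt API. Ordering comes from the standard library’s `graphlib.TopologicalSorter` (Python 3.9+).

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Callable, List


@dataclass
class Step:
    # Hypothetical step: its name, the steps it depends on, and whether
    # a person must approve it before it runs.
    name: str
    depends_on: List[str] = field(default_factory=list)
    needs_confirmation: bool = False


def run_workflow(steps: List[Step], confirm: Callable[[Step], bool]) -> List[str]:
    """Run steps in dependency order, pausing for confirmation where flagged.

    Returns the names of the steps that ran. A declined step, and every
    step that depends on it, is skipped instead of running out of context.
    """
    by_name = {s.name: s for s in steps}
    graph = {s.name: set(s.depends_on) for s in steps}
    ran: List[str] = []
    skipped = set()
    # static_order() yields each step only after all of its dependencies.
    for name in TopologicalSorter(graph).static_order():
        step = by_name[name]
        if any(dep in skipped for dep in step.depends_on):
            skipped.add(name)  # a prerequisite never happened
        elif step.needs_confirmation and not confirm(step):
            skipped.add(name)  # the user declined
        else:
            ran.append(name)  # a real agent would execute the step here
    return ran


workflow = [
    Step("fetch_invoice"),
    Step("draft_refund", ["fetch_invoice"]),
    Step("issue_refund", ["draft_refund"], needs_confirmation=True),
    Step("notify_customer", ["issue_refund"]),
]
print(run_workflow(workflow, confirm=lambda step: False))
# ['fetch_invoice', 'draft_refund']
```

Because the declined refund is recorded as skipped, the customer notification that depends on it is held back too, rather than announcing something that never happened.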

2. Observability: Trust needs a trace

When an AI system acts, people need to understand why. That’s not a developer luxury — it’s a design responsibility.

“When people can’t see how a decision was made, they start assuming it was wrong.” — Edward Chu

Observability bridges that gap. It’s the difference between a black-box experience and a system whose decisions users can inspect and calibrate their trust against.

Think of it like designing a GPS: people don’t just want the destination — they want to know how the system is getting them there. Traces, confirmations, and reasoning cards make the invisible visible. And that visibility builds confidence.
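
As a rough illustration of traces and reasoning cards, here is a minimal Python sketch of an append-only decision trace, assuming each agent action is logged together with a plain-language reason. The `TraceEvent` fields and the `DecisionTrace` class are hypothetical; a production system would more likely send these events to its existing logging or tracing pipeline.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List


@dataclass
class TraceEvent:
    # Hypothetical fields: what the agent did, why, and with which inputs.
    action: str
    reason: str
    inputs: Dict[str, Any]
    timestamp: float = field(default_factory=time.time)


class DecisionTrace:
    """Append-only record of an agent's actions and its stated reasons."""

    def __init__(self) -> None:
        self.events: List[TraceEvent] = []

    def record(self, action: str, reason: str, **inputs: Any) -> None:
        self.events.append(TraceEvent(action, reason, inputs))

    def to_json(self) -> str:
        # Machine-readable trace for logs and debugging tools.
        return json.dumps([asdict(e) for e in self.events])

    def reasoning_card(self) -> str:
        # Plain-language summary a UI could show beside the agent's answer.
        return "\n".join(
            f"{i}. {e.action}: {e.reason}" for i, e in enumerate(self.events, start=1)
        )


trace = DecisionTrace()
trace.record("look_up_order", "The user asked where order A-17 is", order_id="A-17")
trace.record("query_carrier", "The order has shipped, so the carrier has the latest status")
print(trace.reasoning_card())
```

Keeping one record with two renderings (JSON for engineers, a numbered summary for users) means the explanation a user sees cannot drift from what was actually logged.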

3. Recovery: Designing for when—not if—things fail

Every system fails. The difference between a good one and a great one is how it fails.

Agents that simply stop when something goes wrong feel brittle. The ones that recover — clarifying, retrying, or offering the user control — feel trustworthy.

Designing for recovery isn’t about perfection. It’s about maintaining context and respect for the user’s time and intent, even when the path breaks.
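
One way to express clarifying, retrying, and offering the user control in code is sketched below in Python, assuming a task that signals failure by raising an exception. Which errors count as transient, the retry count, and the backoff delays are illustrative assumptions, and `run_with_recovery` and `Outcome` are hypothetical names.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Optional, Tuple, Type


@dataclass
class Outcome:
    status: str          # "done" or "needs_user"
    result: Any = None
    message: str = ""    # clarifying question shown to the user on failure
    attempts: int = 0


def run_with_recovery(
    task: Callable[[], Any],
    retries: int = 2,
    base_delay: float = 0.5,
    transient: Tuple[Type[Exception], ...] = (TimeoutError, ConnectionError),
    sleep: Callable[[float], None] = time.sleep,
) -> Outcome:
    """Retry transient failures with backoff, then hand control to the user."""
    last_error: Optional[Exception] = None
    attempts = 0
    while attempts <= retries:
        attempts += 1
        try:
            return Outcome("done", result=task(), attempts=attempts)
        except transient as err:
            last_error = err
            if attempts <= retries:
                sleep(base_delay * 2 ** (attempts - 1))  # exponential backoff
        except Exception as err:
            # Not a transient problem: retrying won't help, so escalate now.
            last_error = err
            break
    return Outcome(
        "needs_user",
        message=(
            f"I couldn't finish this after {attempts} attempt(s): {last_error}. "
            "Should I try again, change the request, or hand it over to you?"
        ),
        attempts=attempts,
    )


def lookup_account():
    # Stand-in task that fails in a way retrying cannot fix.
    raise ValueError("no account matches 'Acme Corp'")


outcome = run_with_recovery(lookup_account)
print(outcome.status)  # needs_user
print(outcome.message)
```

The design choice worth noting is the return value: instead of raising and stopping, the function returns a message that keeps the context (how many attempts, what went wrong) and offers the user a next step.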

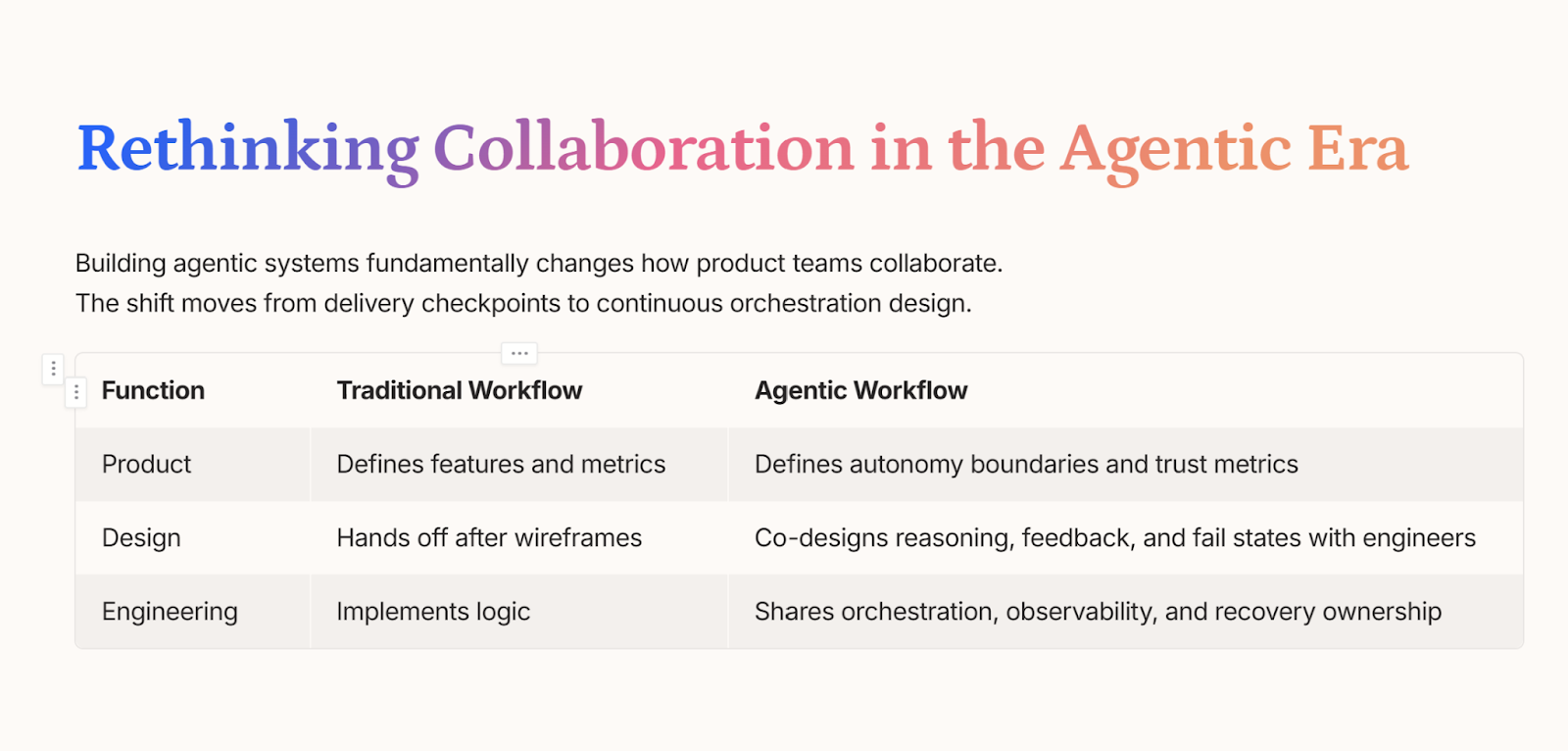

4. Lifecycle & Alignment: Design never stops at launch

Agentic systems don’t live in tidy handoffs. A prompt tweak or a model swap can change behavior across an entire product.

That’s why alignment between Product, Design, and Engineering can’t be episodic. It has to be continuous.

“Every small design tweak or model swap can change how the agent behaves. The collaboration now has to be continuous.” — Edward Chu

At Adopt, we think of this as a shared creative surface — a single feedback loop where design defines reasoning, engineering builds guardrails, and product defines trust metrics. It’s a cultural shift as much as a technical one.

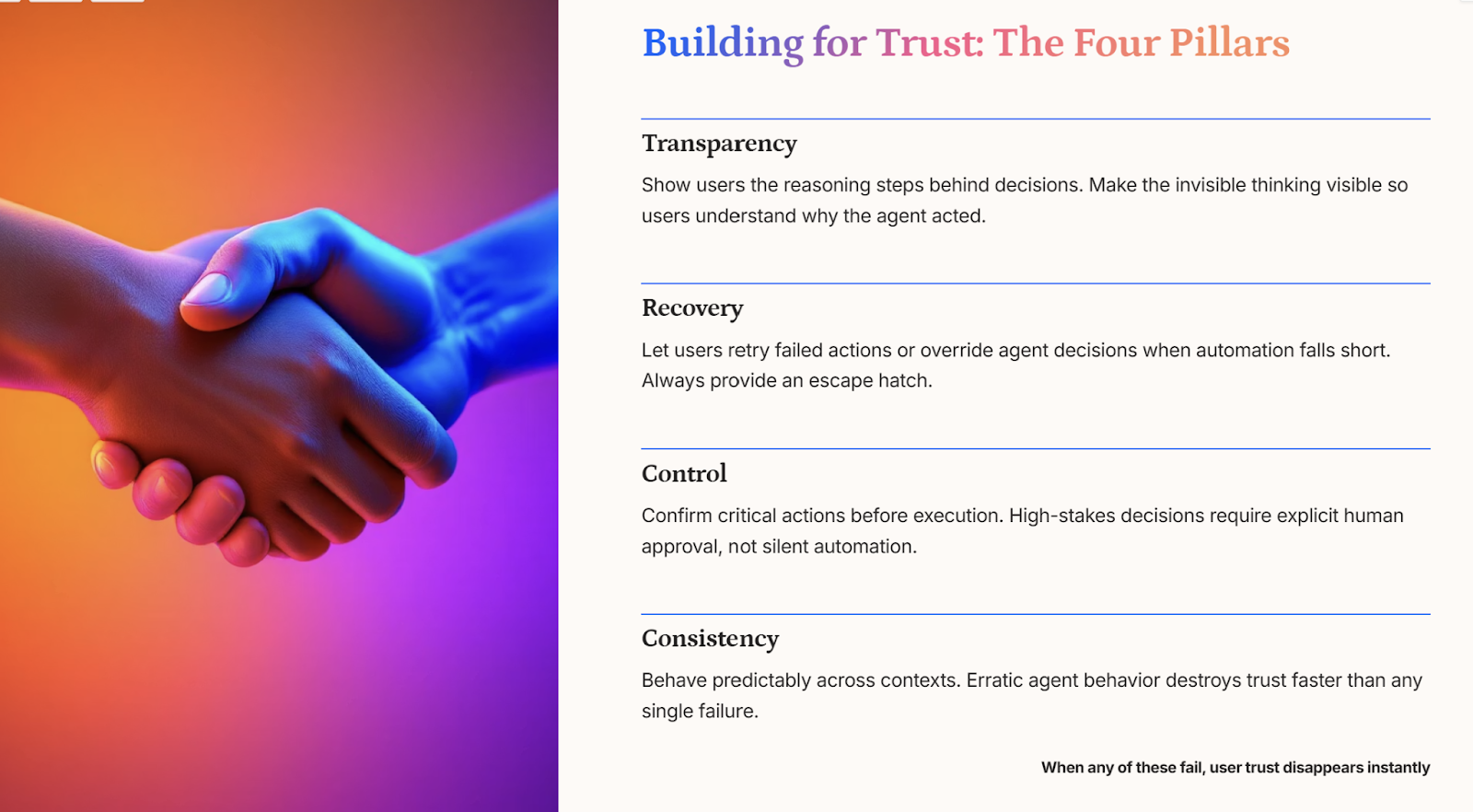

5. Trust: The invisible interface

Trust is the part of the interface you can’t draw. It’s how users feel about what they can’t see. And that means trust has to be designed — not assumed.

At Adopt, we frame it around four operational pillars:

- Transparency — Show how reasoning unfolds.

- Recovery — Allow undo or intervention.

- Control — Confirm before executing critical actions.

- Consistency — Deliver stable results, not surprises.
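
Two of these pillars, Control and Recovery, map fairly directly onto code. The sketch below is a minimal, hypothetical Python example: critical actions wait for an explicit yes, and every completed action carries its own undo. `Action`, `Executor`, and the invoice payment are illustrative assumptions, not Adopt’s implementation; the `confirm` callback stands in for whatever confirmation UI a product would show.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Action:
    # Hypothetical action: a description, how to perform it, how to reverse
    # it, and whether it is critical enough to need explicit confirmation.
    description: str
    do: Callable[[], None]
    undo: Callable[[], None]
    critical: bool = False


class Executor:
    def __init__(self, confirm: Callable[[Action], bool]) -> None:
        self.confirm = confirm
        self.history: List[Action] = []  # completed actions, newest last

    def execute(self, action: Action) -> bool:
        # Control: a critical action runs only after an explicit yes.
        if action.critical and not self.confirm(action):
            return False
        action.do()
        self.history.append(action)
        return True

    def undo_last(self) -> Optional[str]:
        # Recovery: reverse the most recent action, if there is one.
        if not self.history:
            return None
        action = self.history.pop()
        action.undo()
        return action.description


balance = {"amount": 100}
payment = Action(
    "Pay invoice #12",
    do=lambda: balance.update(amount=balance["amount"] - 40),
    undo=lambda: balance.update(amount=balance["amount"] + 40),
    critical=True,
)
# A real product would ask the user here; this stand-in always approves.
executor = Executor(confirm=lambda action: True)
print(executor.execute(payment), balance["amount"])  # True 60
print(executor.undo_last(), balance["amount"])  # Pay invoice #12 100
```

Storing the undo alongside the action keeps reversal cheap to offer; actions that genuinely cannot be reversed are natural candidates for `critical=True`.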

“The best experience is invisible—because everything just works.” — Edward Chu

In this era, trust is the new usability.

The Agentic UX Checklist: A living framework for accountability

To close the session, we shared a tool our team uses internally: the Agentic UX System Checklist.

It’s not a design framework. It’s an accountability map — a way to ensure visibility, governance, and recovery are treated as first-class features.

Take a screenshot, save it, or bookmark this article. This list is meant to live beside your next design sprint.

“Make reasoning visible from day one—even if it’s just logging the chain of actions behind the scenes.” — Edward Chu

What this conversation revealed

Moderating this discussion gave me clarity on something I’ve been sensing for months:

We’re witnessing a quiet shift from interface design to intelligence design.

The systems that will define the next decade won’t just look elegant — they’ll think aloud, correct themselves, and invite users into the reasoning process.

That’s what “beyond the GUI” really means.

It’s not about removing the screen — it’s about extending trust beyond it.

“The future of UX isn’t what users see. It’s how systems reason, recover, and earn trust.” — Edward Chu

Missed the session?

If you couldn’t join live, you can watch the full webinar recording.

It dives deeper into these blind spots and shows how teams are redefining design, observability, and recovery together.

Continue your agentic design journey

- Take a screenshot or bookmark this article to keep the checklist handy.

- Request a Demo to see how Adopt operationalizes orchestration, observability, and trust for enterprise-scale systems.

Because the next era of design isn’t about where users click — it’s about whether they believe what just happened.

Accelerate Your Agent Roadmap

Adopt gives you the complete infrastructure layer to build, test, deploy and monitor your app’s agents — all in one platform.